List Crawler: Uncover the power of efficiently extracting data from lists on websites. This guide delves into the intricacies of list crawling, exploring techniques, ethical considerations, and practical applications. We’ll journey through the process, from defining what a list crawler is and what it does to mastering data extraction methods and optimizing performance for efficient data retrieval.

We will examine different types of lists, compare data extraction techniques such as regular expressions and DOM parsing, and address crucial aspects such as error handling, ethical considerations, and legal compliance. We will also cover advanced techniques for handling dynamic content and for optimizing performance. The practical applications and real-world scenarios presented will showcase the versatility and importance of list crawlers across diverse industries.

List Crawlers: A Comprehensive Guide

List crawlers are automated programs designed to efficiently extract data from lists found on websites. This guide provides a detailed exploration of list crawlers, encompassing their definition, functionality, data extraction techniques, error handling, ethical considerations, applications, advanced techniques, and performance optimization.

Defining “List Crawler”

A list crawler is a type of web scraper specifically designed to identify and extract data from lists presented in various formats on web pages. Its primary purpose is to systematically collect and process list-based information, converting unstructured web data into structured, usable formats for analysis or other applications. These lists can range from simple ordered or unordered lists to complex nested structures.

List crawlers work by navigating a website’s HTML structure, identifying list elements (typically `<ul>`, `<ol>`, and `<li>` tags), and extracting the data contained within those elements. They target several list types, including ordered lists (numbered), unordered lists (bulleted), and nested lists (lists within lists). The extracted data can then be stored in a structured format such as a CSV file or a database, or held in other data structures for further processing.
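
As a minimal sketch of how a crawler can recover ordered, unordered, and nested lists, the class below walks the HTML tags and builds nested Python lists. It uses the standard library’s `html.parser` rather than Beautiful Soup so it runs without extra installs. The class name `ListExtractor` is hypothetical, and for simplicity any text that follows a nested list inside the same item is ignored.

```python
from html.parser import HTMLParser


class ListExtractor(HTMLParser):
    """Collect <ul>/<ol> lists as nested Python lists of item text.

    Each list becomes a Python list; a nested list is appended as a
    sub-list right after its parent item's text.
    """

    def __init__(self):
        super().__init__()
        self.lists = []    # top-level lists found on the page
        self._stack = []   # lists currently open, innermost last
        self._text = None  # text pieces of the current <li>, or None

    def _flush(self):
        # Move buffered item text (whitespace-normalized) into the innermost list.
        if self._text is not None and self._stack:
            text = " ".join("".join(self._text).split())
            if text:
                self._stack[-1].append(text)
        self._text = None

    def handle_starttag(self, tag, attrs):
        if tag in ("ul", "ol"):
            self._flush()  # parent item's text comes before its sub-list
            new_list = []
            if self._stack:
                self._stack[-1].append(new_list)  # nested list
            else:
                self.lists.append(new_list)       # top-level list
            self._stack.append(new_list)
        elif tag == "li":
            self._flush()  # HTML allows </li> to be omitted
            self._text = []

    def handle_endtag(self, tag):
        if tag == "li":
            self._flush()
        elif tag in ("ul", "ol") and self._stack:
            self._flush()
            self._stack.pop()

    def handle_data(self, data):
        if self._text is not None:
            self._text.append(data)


parser = ListExtractor()
parser.feed("<ol><li>Fruit<ul><li>Apple</li><li>Pear</li></ul></li><li>Bread</li></ol>")
print(parser.lists)  # [['Fruit', ['Apple', 'Pear'], 'Bread']]
```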

A simple flowchart illustrating a list crawler’s operation would involve these steps: 1. Identify Target URL; 2. Fetch Web Page; 3. Parse HTML; 4. Locate List Elements; 5. Extract List Items; 6. Clean and Format Data; 7. Store Data; 8. Handle Errors; 9. Repeat for other URLs (if applicable).
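
The steps above can be sketched as a small pipeline. This is an illustration under stated assumptions, not a production crawler: `fetch`, `ItemParser`, `clean`, `crawl`, and `save_csv` are hypothetical names, every `<li>` on a page is treated as one flat item, and the fetcher is passed in as a parameter so the pipeline can be exercised without network access.

```python
import csv
import logging
import urllib.request
from html.parser import HTMLParser

log = logging.getLogger("list_crawler")


def fetch(url, timeout=10.0):
    """Step 2: download a page; urllib raises HTTPError for 4xx/5xx responses."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


class ItemParser(HTMLParser):
    """Steps 3-5: parse HTML and collect the raw text of every <li> element."""

    def __init__(self):
        super().__init__()
        self.items = []
        self._buf = None  # text of the <li> currently open, or None

    def _flush(self):
        if self._buf is not None:
            self.items.append("".join(self._buf))
        self._buf = None

    def handle_starttag(self, tag, attrs):
        if tag == "li":
            self._flush()  # an unclosed <li> ends where the next one starts
            self._buf = []

    def handle_endtag(self, tag):
        if tag in ("li", "ul", "ol"):
            self._flush()

    def handle_data(self, data):
        if self._buf is not None:
            self._buf.append(data)


def clean(items):
    """Step 6: normalize whitespace and drop empty items."""
    return [" ".join(item.split()) for item in items if item.strip()]


def crawl(urls, fetcher=fetch):
    """Steps 1-9: return (url, item) rows for every list item on every page."""
    rows = []
    for url in urls:  # Step 9: repeat for each target URL
        try:
            html = fetcher(url)
        except (OSError, ValueError):  # Step 8: log the failure and move on
            log.exception("failed to fetch %s", url)
            continue
        parser = ItemParser()
        parser.feed(html)
        parser.close()
        rows.extend((url, item) for item in clean(parser.items))
    return rows


def save_csv(rows, path):
    """Step 7: store the rows as CSV."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["url", "item"])
        writer.writerows(rows)
```

For example, `save_csv(crawl(["https://example.com/list"]), "items.csv")` would crawl one placeholder page and write its items to a file; a real crawler would also add the rate limiting and robots.txt checks covered in the sections on robust and responsible crawling.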

Data Extraction Techniques

Several methods can extract data from lists; regular expressions and DOM parsing are the most prominent. Regular expressions match patterns in raw text, while DOM parsing works with the page’s Document Object Model for structured extraction. In Python, the third-party libraries `Beautiful Soup` and `lxml` handle DOM parsing, and the standard library’s `re` module handles regular expressions.

Regular expressions are powerful for extracting data based on patterns, but they are fragile when the HTML structure varies: an added attribute or a line break inside an item can defeat a pattern. DOM parsing works directly with the parsed HTML structure, which makes it more robust, especially for complex or nested lists, though it can take more effort to set up.
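
To make the robustness difference concrete, here is a small sketch (function names are hypothetical) that runs a regular expression and a tag-aware parser over the same list twice: once cleanly formatted, and once with an attribute, an uppercase tag, and line breaks. The parser side uses the standard library’s `html.parser` as a stand-in for Beautiful Soup.

```python
import re
from html.parser import HTMLParser

LI_PATTERN = re.compile(r"<li>(.*?)</li>")


def regex_items(html):
    """Pattern matching: only finds items written exactly as <li>...</li> on one line."""
    return LI_PATTERN.findall(html)


class _LiText(HTMLParser):
    """Tag-aware parsing: attributes, tag case, and line breaks don't matter."""

    def __init__(self):
        super().__init__()
        self.items = []
        self._buf = None

    def handle_starttag(self, tag, attrs):  # tag names arrive lowercased
        if tag == "li":
            self._buf = []

    def handle_endtag(self, tag):
        if tag == "li" and self._buf is not None:
            self.items.append(" ".join("".join(self._buf).split()))
            self._buf = None

    def handle_data(self, data):
        if self._buf is not None:
            self._buf.append(data)


def parser_items(html):
    p = _LiText()
    p.feed(html)
    p.close()
    return p.items


simple = "<ul><li>Item 1</li><li>Item 2</li></ul>"
messy = '<ul>\n  <LI class="a">Item 1</LI>\n  <li>Item\n  2</li>\n</ul>'

print(regex_items(simple))   # ['Item 1', 'Item 2']
print(parser_items(simple))  # ['Item 1', 'Item 2']
print(regex_items(messy))    # []
print(parser_items(messy))   # ['Item 1', 'Item 2']
```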

The table below compares the two techniques and pairs each with a short Python snippet; the DOM-parsing example uses Beautiful Soup.

| Technique | Pros | Cons | Example code snippet (Python) |
|---|---|---|---|
| Regular expressions | Flexible; efficient for simple patterns | Fragile when HTML structure varies; hard to read for complex patterns | `import re; text = "<li>Item 1</li><li>Item 2</li>"; items = re.findall(r"<li>(.*?)</li>", text)` |
| DOM parsing (Beautiful Soup) | Robust; handles complex HTML structures well | Can be more complex to implement than regular expressions | `from bs4 import BeautifulSoup; soup = BeautifulSoup(html, 'html.parser'); items = [li.text for li in soup.find_all('li')]` |

Handling Errors and Edge Cases

List crawling often encounters errors such as malformed HTML, missing data, broken links, or unavailable data sources. Robust error handling is crucial. Strategies include implementing try-except blocks to catch exceptions, using HTTP status codes to identify broken links, and employing retry mechanisms for temporary network issues. Comprehensive logging helps track errors and facilitates debugging.
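
A minimal sketch of these strategies using only the standard library: the HTTP status code decides whether a failure is a broken link (give up at once) or transient (retry), retries back off exponentially, and every failure is logged. The set of retryable codes, the timeout, and the backoff schedule are assumptions to tune per site, and the `opener` and `sleep` parameters exist only so the hypothetical `fetch_with_retry` helper can be tested without a network.

```python
import logging
import time
import urllib.error
import urllib.request

log = logging.getLogger("list_crawler")

# Status codes treated as transient; an assumption to adjust per site.
RETRYABLE_STATUS = {429, 500, 502, 503, 504}


def fetch_with_retry(url, attempts=3, backoff=1.0,
                     opener=urllib.request.urlopen, sleep=time.sleep):
    """Return the response body for `url`, retrying transient failures.

    Non-retryable HTTP errors (e.g. 404 for a broken link) are raised at once;
    429/5xx responses and network errors are retried with exponential backoff.
    """
    for attempt in range(1, attempts + 1):
        try:
            with opener(url, timeout=10) as resp:
                return resp.read()
        except urllib.error.HTTPError as err:  # must precede URLError (its parent)
            if err.code not in RETRYABLE_STATUS or attempt == attempts:
                log.error("giving up on %s: HTTP %s", url, err.code)
                raise
            log.warning("HTTP %s from %s (attempt %d/%d)", err.code, url, attempt, attempts)
        except (urllib.error.URLError, TimeoutError) as err:
            if attempt == attempts:
                log.error("giving up on %s: %s", url, err)
                raise
            log.warning("network error for %s: %s (attempt %d/%d)", url, err, attempt, attempts)
        sleep(backoff * 2 ** (attempt - 1))  # waits 1s, 2s, 4s, ... by default
```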

Best practices for robust list crawling include: 1. Implement comprehensive error handling; 2. Use HTTP status codes to detect problems; 3. Employ retry mechanisms for transient errors; 4. Implement rate limiting to avoid overloading servers; 5. Log all errors and exceptions for debugging.
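
Rate limiting (practice 4) can be as simple as enforcing a minimum gap between requests. The `RateLimiter` class below is a hypothetical helper, not part of any library; it tracks a single stream of requests, so a crawler that visits several sites would keep one instance per host. The injectable `clock` and `sleep` arguments are only there for testing.

```python
import time


class RateLimiter:
    """Enforce a minimum interval between requests (hypothetical helper)."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval  # seconds between requests
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Sleep just long enough that requests are min_interval apart."""
        now = self._clock()
        if self._last is not None:
            remaining = self._last + self.min_interval - now
            if remaining > 0:
                self._sleep(remaining)
                now += remaining
        self._last = now
```

Call `wait()` immediately before each request; for example, `RateLimiter(2.0)` allows at most one request every two seconds. If a site’s robots.txt declares a `Crawl-delay`, that value is a natural minimum.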

Ethical Considerations and Legal Compliance

Ethical web scraping and list crawling require adherence to robots.txt directives, website terms of service, and respect for website owners’ wishes. Ignoring these guidelines can have consequences ranging from IP blocking to legal action and reputational damage. Responsible crawling means respecting website policies, avoiding overloading servers, and only accessing publicly available data.

Guidelines for responsible list crawling include: 1. Always check robots.txt; 2. Adhere to website terms of service; 3. Respect website owners’ wishes; 4. Implement rate limiting; 5. Avoid overloading servers; 6. Only access publicly available data.
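
Checking robots.txt does not require a third-party package: Python’s standard library includes `urllib.robotparser`. In this sketch the rules are parsed from lines already in hand; against a live site you would call `set_url()` with the site’s robots.txt URL and then `read()`. The user-agent string and the helper names are hypothetical.

```python
from urllib import robotparser

USER_AGENT = "example-list-crawler"  # hypothetical; identify your crawler honestly


def load_rules(robots_lines):
    """Parse robots.txt content (for a live file: set_url(...) then read())."""
    rules = robotparser.RobotFileParser()
    rules.parse(robots_lines)
    return rules


def allowed_urls(rules, urls, user_agent=USER_AGENT):
    """Keep only the URLs that robots.txt permits for this user agent."""
    return [url for url in urls if rules.can_fetch(user_agent, url)]


rules = load_rules([
    "User-agent: *",
    "Disallow: /private/",
    "Crawl-delay: 5",
])
print(allowed_urls(rules, ["https://example.com/list", "https://example.com/private/x"]))
# ['https://example.com/list']
print(rules.crawl_delay(USER_AGENT))  # 5
```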

Applications of List Crawlers

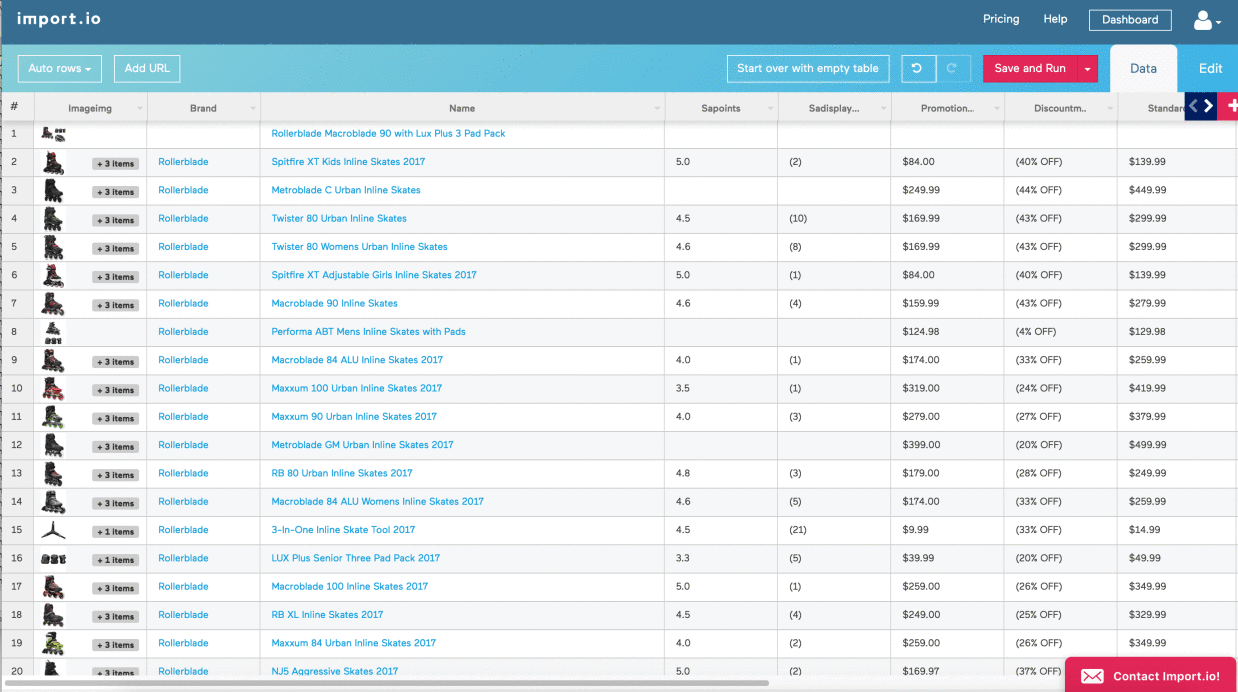

List crawlers have numerous applications, including price comparison websites, data aggregation platforms, and market research tools. They are useful wherever data must be collected systematically from multiple sources, and industries such as e-commerce, finance, and market research commonly use them to support data-driven decision-making.

- E-commerce (price comparison, product aggregation)

- Finance (market data aggregation, financial news scraping)

- Real Estate (property listings, market analysis)

- Market Research (competitive analysis, consumer sentiment analysis)

A real-world example is a price comparison website that uses a list crawler to collect pricing data from various online retailers. This allows the website to present consumers with a comprehensive overview of prices, enabling informed purchasing decisions.

Advanced List Crawling Techniques

Handling dynamic content requires rendering the page’s JavaScript first, typically with a browser-automation tool such as Selenium or Playwright driving a headless browser, and then extracting data from the fully rendered page. Pagination in large lists can be handled by detecting “next” buttons or links, or by analyzing URL patterns (such as a `page=` query parameter), to navigate through pages automatically.
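
Following “next” links can be sketched with the standard library alone. This version assumes the site marks its next-page link with `rel="next"` (many sites do, but check the actual markup), resolves relative links with `urljoin`, and stops when there is no next link, a URL repeats, or a page cap is reached. `NextLinkFinder`, `crawl_pages`, and the `fetch` parameter are hypothetical names.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class NextLinkFinder(HTMLParser):
    """Record the href of the first <a rel="next"> link on a page."""

    def __init__(self):
        super().__init__()
        self.next_href = None

    def handle_starttag(self, tag, attrs):
        if tag != "a" or self.next_href is not None:
            return
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()  # rel may list several values
        if "next" in rel and attrs.get("href"):
            self.next_href = attrs["href"]


def crawl_pages(start_url, fetch, max_pages=50):
    """Yield (url, html) for each page, following rel="next" links.

    Stops when there is no next link, a URL repeats, or max_pages is reached.
    """
    url, seen = start_url, set()
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        html = fetch(url)
        yield url, html
        finder = NextLinkFinder()
        finder.feed(html)
        finder.close()
        url = urljoin(url, finder.next_href) if finder.next_href else None
```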

Efficient data management involves storing the extracted data in a database (relational/SQL or NoSQL) or another structured storage mechanism, which makes the collected information easy to retrieve and analyze.
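
As one concrete option, Python’s built-in `sqlite3` module provides a relational store with no server to run. The schema and function names below are assumptions for illustration. Replacing a page’s rows inside a single transaction keeps re-crawls from leaving duplicates or stale items behind.

```python
import sqlite3


def open_store(path=":memory:"):
    """Open (or create) the database and ensure the items table exists."""
    conn = sqlite3.connect(path)
    conn.execute("""
        CREATE TABLE IF NOT EXISTS list_items (
            source_url TEXT NOT NULL,
            position   INTEGER NOT NULL,
            item       TEXT NOT NULL,
            PRIMARY KEY (source_url, position)
        )""")
    return conn


def save_items(conn, source_url, items):
    """Replace all stored items for source_url with the freshly crawled ones."""
    with conn:  # one transaction: commit on success, roll back on error
        conn.execute("DELETE FROM list_items WHERE source_url = ?", (source_url,))
        conn.executemany(
            "INSERT INTO list_items (source_url, position, item) VALUES (?, ?, ?)",
            [(source_url, i, item) for i, item in enumerate(items)],
        )
```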

Performance Optimization

Factors affecting list crawler performance include network latency, server response time, and the complexity of the target website’s structure. Optimization strategies include making HTTP requests efficiently (for example, reusing connections and skipping pages you don’t need), asynchronous programming, and caching. Minimizing resource consumption comes down to efficient data processing and memory management.
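
Asynchronous programming pays off because a crawler spends most of its time waiting on the network. This `asyncio` sketch runs many fetches concurrently while a semaphore caps how many are in flight, which also keeps the crawler polite. The `fetch` argument is assumed to be an async function you supply (for example, one built on an async HTTP client such as aiohttp or httpx), and `fetch_all` is a hypothetical name.

```python
import asyncio


async def fetch_all(urls, fetch, max_concurrency=5):
    """Run fetch(url) for every URL, at most max_concurrency at a time.

    Results come back in input order; a failed fetch is returned as its
    exception object instead of cancelling the whole batch.
    """
    sem = asyncio.Semaphore(max_concurrency)

    async def bounded(url):
        async with sem:  # wait for a free slot before fetching
            return await fetch(url)

    return await asyncio.gather(*(bounded(u) for u in urls), return_exceptions=True)
```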

A step-by-step guide to improving performance might involve: 1. Optimize HTTP requests; 2. Implement asynchronous operations; 3. Use caching to reduce redundant requests; 4. Efficiently process and store data; 5. Monitor resource usage and identify bottlenecks.
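
Caching (step 3) can start as an in-memory map from URL to response body with an expiry time, so pages requested again within that window are served locally. `CachedFetcher` is a hypothetical wrapper around whatever fetch function you use, and the 300-second default TTL is an arbitrary assumption. Honoring HTTP caching headers such as `ETag` and `Cache-Control` is a more complete approach.

```python
import time


class CachedFetcher:
    """Wrap a fetch function with an in-memory, per-URL TTL cache (hypothetical helper)."""

    def __init__(self, fetch, ttl=300.0, clock=time.monotonic):
        self._fetch = fetch
        self._ttl = ttl          # seconds a cached body stays valid
        self._clock = clock
        self._cache = {}         # url -> (expires_at, body)
        self.hits = 0            # number of requests served from the cache

    def __call__(self, url):
        now = self._clock()
        entry = self._cache.get(url)
        if entry is not None and entry[0] > now:
            self.hits += 1
            return entry[1]
        body = self._fetch(url)  # cache miss or expired entry: fetch again
        self._cache[url] = (now + self._ttl, body)
        return body
```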

Mastering list crawling opens doors to efficient data acquisition and analysis. From understanding the fundamental principles to implementing advanced techniques, this guide equips you with the knowledge and strategies to leverage the power of list crawlers responsibly and effectively. By adhering to ethical guidelines and optimizing performance, you can harness this valuable tool for a wide array of applications, unlocking valuable insights from web data.

Quick FAQs

What are the legal risks associated with list crawling?

Ignoring robots.txt directives, violating terms of service, or scraping copyrighted data can lead to legal action, including cease-and-desist letters or lawsuits.

How can I avoid overloading a website while list crawling?

Implement delays between requests, respect robots.txt rules, and use polite scraping techniques to avoid overwhelming the server. Note that a rotating proxy system spreads requests across IP addresses but does not reduce the total load on the target server, so it is no substitute for rate limiting.

What is the best programming language for building a list crawler?

Python is a popular choice due to its extensive libraries (like Beautiful Soup and Scrapy) designed for web scraping and data extraction.

How do I handle lists with dynamic content loaded via JavaScript?

Use a headless browser like Selenium or Playwright to render the JavaScript and extract data from the fully loaded page.
