List clawer – List Crawler: Unlocking the power of web data extraction, this comprehensive guide delves into the world of list crawlers, exploring their design, implementation, ethical considerations, and diverse applications. We’ll examine various techniques for efficiently extracting data from online lists, addressing challenges such as pagination and dynamic content. This exploration will cover the technical aspects of building a list crawler using Python, alongside crucial ethical and legal considerations to ensure responsible data acquisition.

From understanding the fundamental architecture of a list crawler to mastering advanced techniques for handling complex websites and circumventing anti-scraping measures, this guide provides a complete roadmap for navigating the intricacies of web data extraction. We will cover a range of use cases, highlighting the benefits and limitations of list crawlers in various fields, including e-commerce, market research, and competitive analysis.

List Crawlers: A Comprehensive Guide: List Clawer

List crawlers are automated programs designed to efficiently extract data from lists found on websites. This guide provides a comprehensive overview of list crawlers, covering their definition, functionality, ethical considerations, implementation, and advanced techniques.

Defining “List Crawler”

A list crawler is a type of web crawler specifically designed to extract data from lists presented on websites. These lists can take various forms, including ordered lists, unordered lists, tables, and other structured data formats. List crawlers differ from general web crawlers in their targeted approach; they focus solely on extracting data from list-like structures, often ignoring other content on the page.

Different types of list crawlers exist, categorized by their target and functionality. For instance, some focus on extracting product information from e-commerce sites, while others might target contact details from business directories. The architecture typically includes components for URL fetching, HTML parsing, data extraction, and storage.

List Crawler Architecture

A typical list crawler architecture comprises several key components working in concert. These include a URL scheduler to manage the list of URLs to visit, a web fetcher to retrieve the HTML content of each URL, an HTML parser to analyze the structure of the fetched pages, a data extractor to isolate the desired information from the lists, and a data storage mechanism (e.g., a database) to save the extracted data.

Effective error handling and exception management are also crucial components to ensure robustness.

List Crawler Process Flowchart

A simplified flowchart of a list crawler’s process would begin with initializing the crawler with a starting URL. The crawler then fetches the URL’s content, parses the HTML to identify lists, extracts the desired data from those lists, stores the extracted data, and then proceeds to the next URL in the queue, repeating this process until all URLs are processed or a termination condition is met.

Error handling is integrated throughout the process to manage unexpected situations, such as broken links or server errors. The process continues iteratively, processing each URL and its associated list data.

Data Extraction Techniques Used by List Crawlers

Efficient data extraction is crucial for list crawlers. Several methods are employed, each with its strengths and weaknesses. Regular expressions offer a powerful, flexible approach for pattern matching within text, but can become complex for intricate list structures. HTML parsing libraries like Beautiful Soup provide a more structured approach, enabling navigation of the HTML DOM tree and extraction of data based on tags and attributes.

XPath, a query language for XML and HTML, allows for precise targeting of specific elements within a document, making it ideal for complex list structures. However, challenges arise with dynamically generated lists, requiring techniques like JavaScript rendering or Selenium.

XPath for Data Extraction

XPath excels at extracting data from lists. For example, to extract all product names from a list of products with `

You also can investigate more thoroughly about craigslist shreveport to enhance your awareness in the field of craigslist shreveport.

Handling Pagination

Many lists span multiple pages. To handle pagination, the crawler needs to identify pagination links (e.g., “Next Page,” “Page 2”) and dynamically generate URLs for subsequent pages. This often involves analyzing the structure of pagination links and constructing the URLs programmatically. Regular expressions or HTML parsing can be employed to identify and extract the necessary information for generating the URLs for the subsequent pages.

Ethical Considerations and Legal Aspects

Responsible use of list crawlers is paramount. Respecting website terms of service is crucial; many sites explicitly prohibit scraping. Data privacy regulations (e.g., GDPR) must also be adhered to. Ignoring these guidelines can lead to legal action or account suspension.

Best Practices for Responsible List Crawling

- Always check the website’s

robots.txtfile for crawling restrictions. - Implement rate limiting to avoid overloading the target website’s server.

- Respect the website’s terms of service and privacy policy.

- Avoid aggressive scraping techniques that could disrupt website functionality.

- Consider using proxies and rotating user agents to mask your IP address.

Website Terms of Service Comparison

| Website | Data Scraping Policy | Rate Limits | Consequences of Violation |

|---|---|---|---|

| Example Website A | Prohibited | N/A | Account suspension |

| Example Website B | Allowed with restrictions | 1 request/second | IP blocking |

| Example Website C | Unspecified | N/A | Potential legal action |

| Example Website D | Allowed for research purposes only | N/A | Account suspension |

Implementation and Development using Python

Python, with libraries like Beautiful Soup and Scrapy, is well-suited for list crawler development. A basic crawler would involve fetching URLs using libraries like requests, parsing HTML using Beautiful Soup, extracting data using XPath or CSS selectors, and storing data in a database (e.g., using SQLite or a similar database). Modular design enhances maintainability and scalability. Robust error handling is essential to manage network issues, parsing errors, and other exceptions.

Python Code Snippet (Illustrative), List clawer

This snippet demonstrates basic URL fetching and HTML parsing using requests and Beautiful Soup:

import requestsfrom bs4 import BeautifulSoupurl = "example.com/list"response = requests.get(url)soup = BeautifulSoup(response.content, "html.parser")#Further data extraction logic here using soup object.

Modular Structure

A well-structured list crawler would separate concerns into modules for URL management, web fetching, HTML parsing, data extraction, data storage, and error handling. This improves code readability, maintainability, and testability.

Error Handling and Exception Management

Error handling is crucial. try...except blocks should handle potential exceptions like network errors ( requests.exceptions.RequestException), parsing errors, and database errors. Logging errors is also vital for debugging and monitoring.

Applications and Use Cases of List Crawlers

List crawlers find applications across numerous domains. E-commerce businesses use them for price comparison and competitive analysis. Researchers leverage them to gather data for academic studies. Market analysts utilize them for trend identification and market research. Lead generation and competitive intelligence are other key applications.

Real-World Applications

- Price Comparison: Crawling e-commerce websites to compare prices of products.

- Lead Generation: Extracting contact details from business directories.

- Competitive Intelligence: Gathering data on competitors’ products and pricing.

- Market Research: Analyzing trends and patterns in online markets.

- Academic Research: Collecting data for research papers and studies.

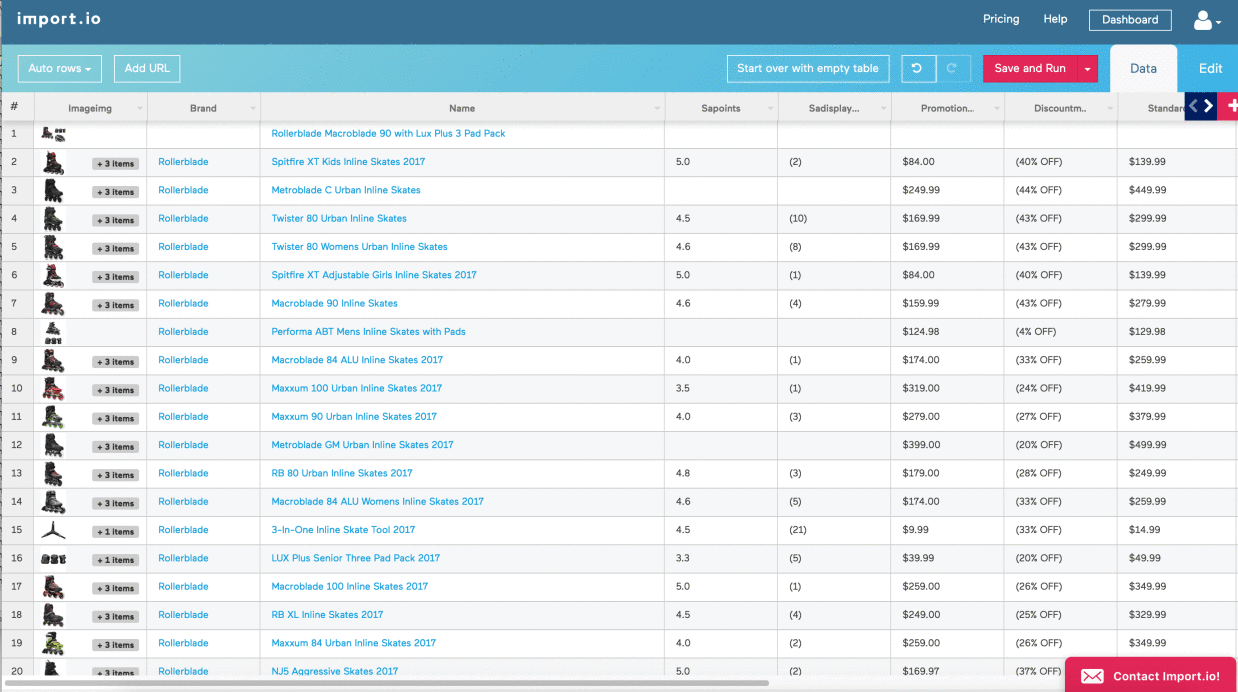

Building a Product Database

A list crawler can be used to build a database of product information by systematically crawling e-commerce websites, extracting product names, descriptions, prices, and other relevant attributes. The extracted data can then be stored in a structured database for further analysis and use.

Advanced Techniques and Challenges

Handling dynamic websites and JavaScript rendering presents significant challenges. Techniques like Selenium or Playwright can render JavaScript, allowing access to data otherwise unavailable. At scale, challenges include CAPTCHAs, IP blocking, and anti-scraping measures. Strategies for overcoming these include using proxies, rotating user agents, and implementing delays between requests.

Overcoming Challenges

- CAPTCHAs: Implement CAPTCHA-solving services or employ techniques to avoid triggering CAPTCHAs.

- IP Blocking: Use proxies and rotating user agents to mask your IP address.

- Anti-scraping Measures: Analyze the website’s anti-scraping techniques and develop strategies to circumvent them (within ethical and legal boundaries).

Proxies and Rotating User Agents

Using proxies and rotating user agents helps to mask your IP address and mimic human browsing behavior, reducing the likelihood of being blocked by the target website. This improves the robustness and longevity of your list crawler.

Mastering the art of list crawling empowers you to harness the vast potential of online data. By understanding the technical intricacies, ethical considerations, and legal implications, you can responsibly leverage list crawlers for a wide array of applications. This guide has equipped you with the knowledge to build efficient and robust crawlers, while adhering to best practices and respecting website terms of service.

Remember that responsible data extraction is paramount; always prioritize ethical considerations and respect website owners’ wishes. The power of data is immense, and its responsible use is crucial for a sustainable digital future.

General Inquiries

What are the limitations of list crawlers?

List crawlers can be hindered by dynamic websites that rely heavily on JavaScript, anti-scraping measures, CAPTCHAs, and IP blocking. They may also struggle with poorly structured or inconsistent data formats.

How can I avoid getting blocked by websites?

Respect robots.txt, implement rate limiting to avoid overwhelming servers, use rotating user agents to mimic human browsing behavior, and consider using proxies to mask your IP address.

What legal issues should I be aware of?

Always review a website’s terms of service regarding data scraping. Respect copyright laws and avoid collecting personally identifiable information without consent. Unauthorized scraping can lead to legal action.

What are some alternative methods to list crawlers?

APIs (Application Programming Interfaces) provided by websites often offer a more reliable and ethical way to access data. If an API is unavailable, consider using web browser automation tools, although these are generally slower and more resource-intensive.