A list crawler is a tool for extracting data from lists on websites, with applications across many fields. This guide covers the functionality, applications, technical aspects, ethical considerations, and advanced techniques associated with list crawlers. We will explore how list crawlers target various list types, from simple ordered and unordered lists to complex nested structures, and how they are used in e-commerce, data aggregation, and academic research.

The guide also addresses the crucial ethical and legal considerations, offering insights into responsible and compliant usage.

We’ll cover the technical details, including programming languages, libraries, and strategies for handling pagination, dynamic content, and anti-scraping measures. Furthermore, we will explore efficient data handling and pre-analysis techniques to prepare the extracted data for subsequent analysis and interpretation. This comprehensive approach aims to equip readers with the knowledge and skills necessary to effectively utilize list crawlers while adhering to ethical and legal standards.

Understanding List Crawlers

List crawlers are automated programs designed to extract data from lists found on websites. Because list markup is repetitive and predictable, they can collect structured information with relatively little site-specific logic, which makes them useful for a range of data-driven tasks.

Defining “List Crawler”

A list crawler is a type of web scraper specifically programmed to identify and extract data from lists presented on web pages. These lists can take various forms, influencing how the crawler is designed and implemented.

List Crawler Functionality

The core functionality involves identifying list elements within a webpage’s HTML structure (using tags like `<ul>`, `<ol>`, or even custom tags), extracting the items within those lists, and storing them in a structured format. This often includes handling nested lists and pagination across multiple pages.

Types of Lists Targeted by List Crawlers

List crawlers can target several list types, each requiring a slightly different approach to extraction:

- Ordered Lists (`<ol>`): Lists where items are numbered sequentially.

- Unordered Lists (`<ul>`): Lists where items are presented with bullet points or other markers.

- Nested Lists: Lists containing other lists within them, requiring recursive parsing.

- Custom Lists: Lists not using standard HTML tags but still exhibiting a list-like structure, requiring more sophisticated parsing techniques.
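Nested lists are the case that most often needs extra care. The following is a minimal sketch, not a definitive implementation: it uses only the standard library's `html.parser` (Beautiful Soup would work as well), tracks open lists and items with explicit stacks, and then walks the resulting tree recursively. The names `NestedListParser`, `parse_lists`, and `flatten` are illustrative, and the sketch assumes well-formed markup in which every `<li>` has a closing tag.

```python
from html.parser import HTMLParser


class NestedListParser(HTMLParser):
    """Build a tree of list items from <ul>/<ol> markup.

    Each item is a dict: {"text": str, "children": [items...]}.
    Assumes every <li> is explicitly closed.
    """

    def __init__(self):
        super().__init__()
        self.roots = []        # top-level items from all lists on the page
        self._list_stack = []  # one target item list per open <ul>/<ol>
        self._item_stack = []  # currently open <li> items

    def handle_starttag(self, tag, attrs):
        if tag in ("ul", "ol"):
            # A list opened inside an <li> becomes that item's children.
            if self._item_stack:
                target = self._item_stack[-1]["children"]
            else:
                target = self.roots
            self._list_stack.append(target)
        elif tag == "li" and self._list_stack:
            item = {"text": "", "children": []}
            self._list_stack[-1].append(item)
            self._item_stack.append(item)

    def handle_endtag(self, tag):
        if tag in ("ul", "ol") and self._list_stack:
            self._list_stack.pop()
        elif tag == "li" and self._item_stack:
            self._item_stack.pop()

    def handle_data(self, data):
        # Text belongs to the innermost open item.
        if self._item_stack:
            self._item_stack[-1]["text"] += data


def parse_lists(html):
    """Return the tree of list items found in an HTML string."""
    parser = NestedListParser()
    parser.feed(html)
    parser.close()
    return parser.roots


def flatten(items, depth=0):
    """Recursively yield (depth, text) pairs with whitespace normalized."""
    for item in items:
        yield depth, " ".join(item["text"].split())
        yield from flatten(item["children"], depth + 1)
```

Calling `list(flatten(parse_lists(html)))` gives one `(depth, text)` pair per item, so the nesting level can be kept as a column when the data is stored.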

Examples of Useful Websites for List Crawlers

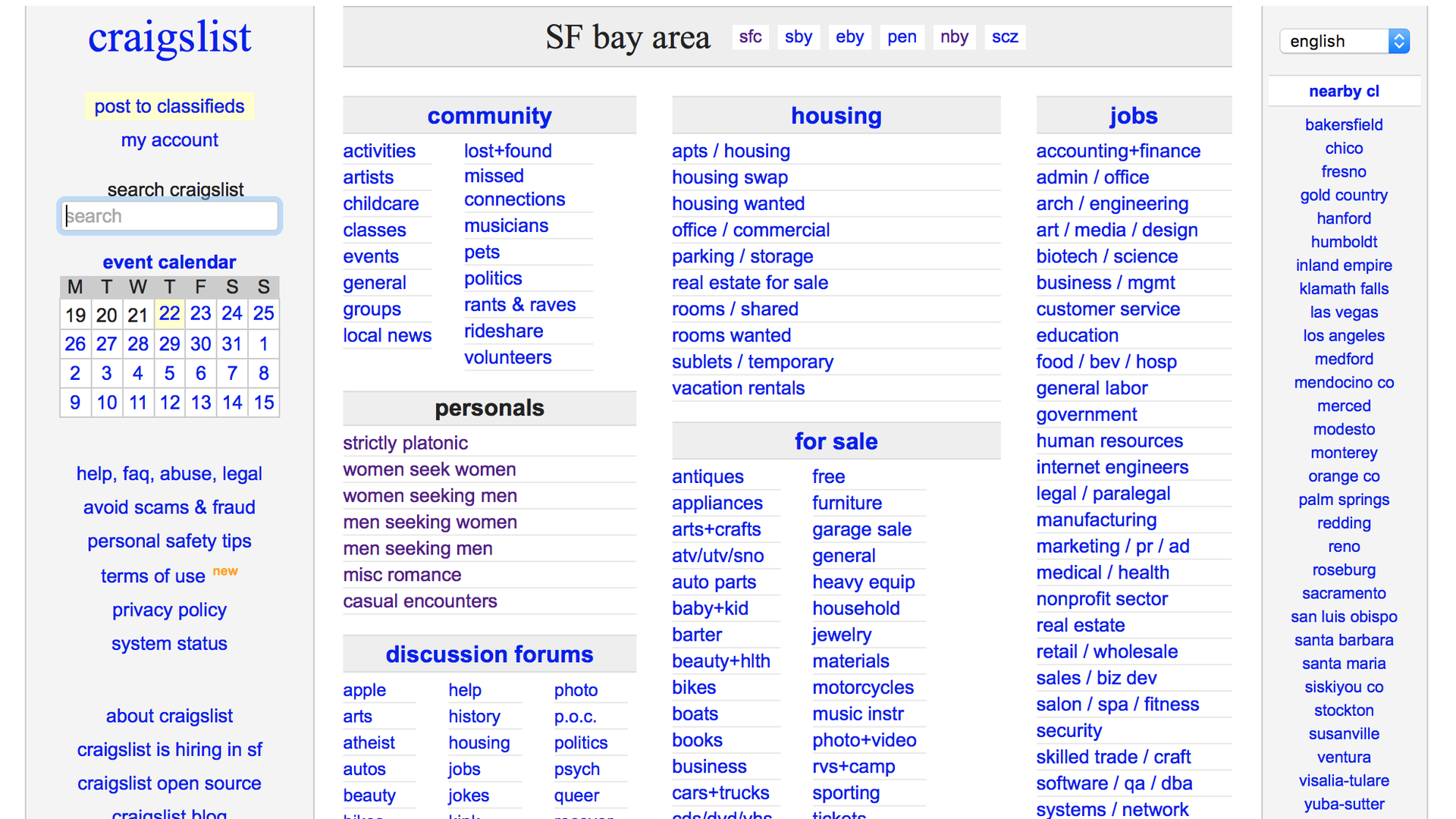

List crawlers are valuable for extracting data from websites containing extensive lists, such as:

- E-commerce sites (product listings, customer reviews)

- News aggregators (article headlines, summaries)

- Research databases (publication lists, author details)

- Real estate portals (property listings, prices)

List Crawler Operational Flowchart

A simplified flowchart illustrating the steps involved in a list crawler’s operation might look like this:

- Identify Target URL: Specify the website or URL containing the desired lists.

- Fetch Webpage: Download the HTML content of the target webpage.

- Parse HTML: Analyze the HTML to locate list elements (`<ul>`, `<ol>`, etc.).

- Extract List Items: Extract the text content from each item within the identified lists.

- Handle Pagination: If the list spans multiple pages, follow pagination links to retrieve additional data.

- Store Data: Save the extracted data in a structured format (e.g., CSV, JSON, database).

Applications of List Crawlers

List crawlers find applications across various domains, leveraging their ability to efficiently extract structured data from websites.

List Crawlers in E-commerce

In e-commerce, list crawlers are used to collect product information (prices, descriptions, reviews), competitor pricing data, and customer feedback. This data is crucial for price comparison websites, market research, and inventory management.

List Crawlers in Data Aggregation

List crawlers play a vital role in data aggregation by gathering information from multiple sources and consolidating it into a single, unified dataset. This is particularly useful for creating comprehensive databases or market reports.

List Crawlers in Web Scraping for Research

Researchers use list crawlers to collect data for academic studies, gathering information from various sources such as research publications, scientific databases, and news articles. This facilitates large-scale data analysis and trend identification.

Comparison of List Crawler Applications

| Application | Benefits | Challenges | Example Websites |
|---|---|---|---|
| E-commerce Price Comparison | Real-time price updates, competitive analysis | Website structure changes, anti-scraping measures | Amazon, eBay |
| News Aggregation | Automated news gathering, trend identification | Data cleaning, handling different news formats | Google News, Bing News |
| Scientific Research | Large-scale data collection, literature review | Data standardization, access restrictions | PubMed, arXiv |
| Real Estate Data Analysis | Market trend analysis, property valuation | Data inconsistency, geographical limitations | Zillow, Realtor.com |

Technical Aspects of List Crawlers

Building a list crawler requires understanding various programming concepts and utilizing appropriate tools.

Programming Languages for List Crawlers

Python is a popular choice due to its extensive libraries for web scraping and data manipulation. Other languages like Java, JavaScript (Node.js), and Ruby are also used, but Python’s ease of use and readily available libraries often make it the preferred option.

Web Scraping Libraries for List Crawling

Several libraries simplify the process of extracting data from web pages. Beautiful Soup (Python) is a widely used library for parsing HTML and XML, while Scrapy (Python) provides a more comprehensive framework for building web scrapers.

Handling Pagination in List Crawlers

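Many websites spread lists across multiple pages. To handle pagination, a list crawler identifies the link to the next page (e.g., a “Next” link or numbered page links) and keeps fetching subsequent pages until no further page is found. Tracking the URLs already visited prevents “Previous” links from sending the crawler in a loop.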
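As a rough sketch of that loop, the example below follows "next" links until none remain. It assumes the site marks its next-page link with `rel="next"`, which many sites do not; in practice the selector has to be adapted per site. The `fetch` parameter is a caller-supplied function (URL in, HTML text out), and `NextLinkFinder` and `crawl_pages` are illustrative names.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class NextLinkFinder(HTMLParser):
    """Record the href of the first <a> whose rel attribute contains "next"."""

    def __init__(self):
        super().__init__()
        self.next_href = None

    def handle_starttag(self, tag, attrs):
        if tag != "a" or self.next_href is not None:
            return
        attrs = dict(attrs)
        rel_values = (attrs.get("rel") or "").split()
        if "next" in rel_values:
            self.next_href = attrs.get("href")


def crawl_pages(start_url, fetch, max_pages=50):
    """Yield (url, html) for each page, following "next" links.

    Stops when there is no next link, when a URL repeats, or after
    max_pages pages, whichever comes first.
    """
    url = start_url
    seen = set()
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        html = fetch(url)
        yield url, html
        finder = NextLinkFinder()
        finder.feed(html)
        # Resolve relative links against the current page URL.
        url = urljoin(url, finder.next_href) if finder.next_href else None
```

The `max_pages` cap and the `seen` set are safety nets: they keep a misconfigured or circular pagination scheme from turning into an endless crawl.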

Building a Basic List Crawler in Python

A basic Python list crawler using Beautiful Soup might involve these steps:

- Import Libraries: `import requests, bs4`

- Fetch Webpage: `response = requests.get(url)`

- Parse HTML: `soup = bs4.BeautifulSoup(response.content, "html.parser")`

- Find Lists: `lists = soup.find_all("ul")` (or `"ol"`)

- Extract Items: Iterate through each list and extract item text using `.find_all("li")`

- Store Data: Write extracted data to a file or database.
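Put together, the steps above might look like the following sketch. It assumes the third-party `requests` and `beautifulsoup4` packages are installed; the function names `fetch_html` and `extract_list_items` and the 10-second timeout are illustrative choices, not part of either library.

```python
import requests
from bs4 import BeautifulSoup


def fetch_html(url, timeout=10):
    """Download a page and return its HTML text, raising on HTTP errors."""
    response = requests.get(url, timeout=timeout)
    response.raise_for_status()
    return response.text


def extract_list_items(html):
    """Return the text of each <li> that is a direct child of a <ul> or <ol>."""
    soup = BeautifulSoup(html, "html.parser")
    items = []
    for list_tag in soup.find_all(["ul", "ol"]):
        # recursive=False looks at direct children only, so an item in a
        # nested list is reported once (by its own list), not twice.
        # Note that a parent item's text still includes its nested text.
        for li in list_tag.find_all("li", recursive=False):
            items.append(li.get_text(" ", strip=True))
    return items
```

A typical call would be `extract_list_items(fetch_html("https://example.com/"))`, where the URL is a placeholder. Keeping fetching and parsing in separate functions makes the parser easy to test against saved HTML without touching the network.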

Ethical and Legal Considerations

Responsible and ethical use of list crawlers is crucial to avoid legal issues and maintain website integrity.

Ethical Implications of List Crawlers

Ethical concerns include respecting website terms of service, avoiding overloading servers, and not using extracted data for malicious purposes. Responsible crawling prioritizes minimizing the impact on the target website.

Legal Restrictions and Terms of Service

Websites often have terms of service explicitly prohibiting scraping or automated data extraction. Ignoring these terms can lead to legal action. Respecting robots.txt directives is also essential.

Risks Associated with Unauthorized List Crawling

Unauthorized crawling can result in IP bans, legal action from website owners, and potential damage to the target website’s infrastructure. It’s crucial to operate within legal and ethical boundaries.

Responsible and Ethical List Crawler Design

Responsible design includes implementing delays between requests, using polite user-agent strings, and respecting robots.txt rules. Transparency and responsible data usage are also key ethical considerations.
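A minimal sketch of these practices using only the standard library is shown below. The user-agent string, the contact URL in it, the one-second default delay, and the names `build_robots` and `PoliteScheduler` are all assumptions made for illustration. The robots.txt content is passed in as text so the logic can be tried offline; a real crawler would download it from the target site first.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical identifier; a real crawler should name itself and give contact details.
USER_AGENT = "example-list-crawler/0.1 (+https://example.com/contact)"


def build_robots(robots_txt):
    """Parse robots.txt content that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


class PoliteScheduler:
    """Check robots.txt permissions and enforce a delay between requests."""

    def __init__(self, robots, user_agent=USER_AGENT, default_delay=1.0):
        self.robots = robots
        self.user_agent = user_agent
        # Use the site's Crawl-delay when it declares one.
        self.delay = robots.crawl_delay(user_agent) or default_delay
        self._last_request = None

    def allowed(self, url):
        """Return True if robots.txt permits this user agent to fetch url."""
        return self.robots.can_fetch(self.user_agent, url)

    def wait(self, clock=time.monotonic, sleep=time.sleep):
        """Sleep just long enough to keep self.delay seconds between requests."""
        now = clock()
        if self._last_request is not None:
            remaining = self.delay - (now - self._last_request)
            if remaining > 0:
                sleep(remaining)
        self._last_request = clock()
```

In a crawl loop, each URL would first be checked with `allowed(url)`, then `wait()` would be called immediately before the request is sent with the same user-agent string in its headers.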

Advanced List Crawling Techniques

Handling dynamic content and overcoming anti-scraping measures are crucial aspects of advanced list crawling.

Handling Dynamic Content Loaded via JavaScript

For websites using JavaScript to load content dynamically, tools like Selenium or Playwright can be used to render the webpage fully before scraping. These tools simulate a browser environment, enabling extraction of dynamically generated lists.

Dealing with CAPTCHAs and Anti-Scraping Measures

Websites employ CAPTCHAs and other anti-scraping techniques to deter automated crawling. Strategies to overcome these include using CAPTCHA-solving services (with ethical considerations) or employing techniques like rotating proxies and user-agent spoofing.

Optimizing List Crawling Speed and Efficiency

Optimizing speed and efficiency involves techniques like asynchronous requests, parallel processing, and efficient data storage. Minimizing network requests and optimizing data parsing algorithms are also important.
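One way to sketch asynchronous requests is with `asyncio` and a semaphore that caps how many requests are in flight at once. The example below is deliberately library-agnostic: `fetch` is an async function supplied by the caller (for instance, a wrapper around an async HTTP client), and the name `crawl_concurrently` and the default limit of 5 are assumptions.

```python
import asyncio


async def crawl_concurrently(urls, fetch, max_concurrency=5):
    """Fetch all URLs with at most max_concurrency requests in flight.

    fetch is an async callable taking a URL and returning HTML text.
    Returns a dict mapping each URL to its result, or to the exception
    raised while fetching it.
    """
    urls = list(urls)
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded_fetch(url):
        # The semaphore blocks here once the concurrency limit is reached.
        async with semaphore:
            return await fetch(url)

    results = await asyncio.gather(
        *(bounded_fetch(url) for url in urls),
        return_exceptions=True,  # one failed page should not abort the rest
    )
    return dict(zip(urls, results))
```

A script would typically run this with `asyncio.run(crawl_concurrently(urls, fetch))`. Keeping the limit low is part of crawling responsibly: concurrency speeds up the crawl, but it also multiplies the load placed on the target server.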

Strategies for Avoiding IP Bans

- Use rotating proxies to mask your IP address.

- Implement delays between requests to avoid overwhelming the server.

- Respect robots.txt rules and website terms of service.

- Use a polite user-agent string to identify your crawler.

- Monitor your crawler’s activity for signs of being blocked.
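Monitoring for blocks can start with something as simple as watching HTTP status codes and slowing down when they suggest throttling. The sketch below treats 403, 429, and 503 as warning signs, which is an assumption rather than a rule (sites signal blocking in many ways), and computes an exponential backoff delay with random jitter. The names and default values are illustrative.

```python
import random

# Assumption: status codes that commonly accompany throttling or blocking.
BLOCK_STATUS_CODES = {403, 429, 503}


def looks_blocked(status_code):
    """Return True if the status code suggests the crawler is being throttled."""
    return status_code in BLOCK_STATUS_CODES


def backoff_delay(attempt, base=1.0, cap=60.0, jitter=random.random):
    """Seconds to wait before retry number `attempt` (0-based).

    The delay doubles with each attempt up to `cap`, plus up to one
    second of random jitter so retries do not arrive in lockstep.
    """
    return min(cap, base * (2 ** attempt)) + jitter()
```

When `looks_blocked` returns true, the crawler would sleep for `backoff_delay(attempt)` seconds before retrying, and give up after a small number of attempts rather than keep pressing a server that is asking it to stop.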

Data Handling and Analysis (Pre-Analysis Stage)

Efficient data handling and preprocessing are essential for successful data analysis.

Methods for Storing and Managing Extracted Data

Extracted data can be stored in various formats, including CSV files, JSON files, or relational databases (like MySQL or PostgreSQL). The choice depends on the size and complexity of the data and the subsequent analysis methods.

Data Cleaning and Preprocessing

Data cleaning involves removing duplicates, handling missing values, and correcting inconsistencies. Preprocessing might include data transformation, normalization, and feature engineering, preparing the data for analysis.
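For plain text items, a first cleaning pass might look like the sketch below. It collapses whitespace, drops empty or missing values, and removes duplicates while keeping the order of first appearance. Treating items that differ only in letter case as duplicates is an assumption that will not suit every dataset, and `clean_items` is an illustrative name.

```python
def clean_items(items):
    """Normalize whitespace, drop empty values, and remove duplicates.

    Order of first appearance is preserved. Duplicates are detected
    case-insensitively; the first spelling seen is the one kept.
    """
    seen = set()
    cleaned = []
    for raw in items:
        if raw is None:
            continue  # missing value
        text = " ".join(str(raw).split())  # collapse runs of whitespace
        key = text.casefold()
        if text and key not in seen:
            seen.add(key)
            cleaned.append(text)
    return cleaned
```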

Data Formats for Storing Extracted List Data

CSV and JSON are common formats for storing extracted list data. CSV is simple and easily readable, while JSON offers more flexibility for complex data structures.
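The difference between the two formats is easy to see with the standard library's `csv` and `json` modules. In this sketch each extracted item is a dictionary; the field names and the helper names `to_csv` and `to_json` are assumptions chosen for illustration. Both helpers return strings so that the caller decides where to write them.

```python
import csv
import io
import json


def to_csv(rows, fieldnames):
    """Serialize a list of flat dicts to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_json(rows):
    """Serialize rows to JSON text; nested lists and dicts are preserved."""
    return json.dumps(rows, ensure_ascii=False, indent=2)
```

CSV works well when every item has the same flat set of fields. JSON is the more natural fit when items carry nested data, such as a list item with its own sub-items, because the nesting survives a round trip unchanged.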

Database Table Schema for Extracted List Data

A sample database schema for storing extracted list data might include columns like:

| Column Name | Data Type | Description |
|---|---|---|
| id | INT (primary key) | Unique identifier for each list item |
| source_url | VARCHAR | URL of the webpage where the data was extracted |
| list_item | TEXT | Text content of the list item |
| extraction_date | TIMESTAMP | Date and time of data extraction |
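As an illustration, the schema above can be created with Python's built-in `sqlite3` module. This is a sketch under a few assumptions: the table name `list_items` is hypothetical, SQLite is used instead of MySQL or PostgreSQL so the example runs without a server, and the key is declared `INTEGER PRIMARY KEY` because that is the form SQLite auto-assigns.

```python
import sqlite3

# Hypothetical table name; the columns follow the sample schema.
SCHEMA = """
CREATE TABLE IF NOT EXISTS list_items (
    id              INTEGER PRIMARY KEY,
    source_url      VARCHAR NOT NULL,
    list_item       TEXT NOT NULL,
    extraction_date TIMESTAMP DEFAULT CURRENT_TIMESTAMP
)
"""


def save_items(conn, source_url, items):
    """Create the table if needed and insert one row per list item."""
    conn.execute(SCHEMA)
    with conn:  # commits on success, rolls back if an insert fails
        conn.executemany(
            "INSERT INTO list_items (source_url, list_item) VALUES (?, ?)",
            [(source_url, item) for item in items],
        )
```

For example, `save_items(sqlite3.connect("lists.db"), url, items)` would append the items from one page, with `id` and `extraction_date` filled in by the database. The file name `lists.db` is a placeholder.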

In conclusion, list crawlers present a powerful yet nuanced tool for data extraction from websites. Understanding their functionality, applications, and ethical implications is crucial for responsible and effective use. By mastering the technical aspects and employing advanced techniques, users can harness the power of list crawlers for various purposes, from enhancing e-commerce operations to facilitating data-driven research. Remember, responsible usage, respecting website terms of service, and prioritizing ethical considerations are paramount in leveraging the benefits of this technology.

FAQ Guide

What are the limitations of a list crawler?

List crawlers may struggle with dynamically loaded content (requiring JavaScript rendering), CAPTCHAs, and websites with robust anti-scraping measures. They also might not be suitable for extracting data from complex, unstructured websites.

How can I avoid getting my IP banned while using a list crawler?

Employ techniques such as using proxies, rotating IP addresses, respecting robots.txt, implementing delays between requests, and limiting the number of requests per unit of time.

What are some alternative methods to list crawling?

Alternatives include using APIs (if available), employing browser automation tools like Selenium or Playwright, or manually copying and pasting data (for small datasets).

What are the best practices for storing extracted list data?

Store data in structured formats like CSV, JSON, or relational databases. Choose a format appropriate for the size and complexity of the data, and consider using version control for data management.