List Crawler: This exploration delves into automated web data extraction, focusing on the techniques and applications of list crawlers. We’ll examine how these tools gather information from online lists, the ethical considerations involved, and the practical applications across diverse fields. Understanding list crawlers shows how technology can be used to access and analyze large amounts of publicly available data.

From defining the functionality of a list crawler and exploring different data extraction methods (regular expressions, DOM parsing) to addressing the crucial ethical and legal aspects of web scraping, this comprehensive overview will equip you with the knowledge needed to navigate this complex landscape responsibly and effectively. We will cover various applications, including e-commerce price comparison and market research, as well as crucial technical implementation aspects and performance optimization strategies.

Finally, we’ll explore how to effectively visualize and present the extracted data for maximum impact.

Defining “List Crawler”

A list crawler is a type of web crawler specifically designed to extract data from lists found on websites. Its functionality revolves around identifying, navigating, and extracting information from various list structures, making it a valuable tool for data collection and analysis.

List Crawler Functionality

List crawlers operate by systematically traversing web pages, identifying lists using HTML tags (e.g., <ul>, <ol>), and extracting the individual items within those lists. This process often involves parsing the HTML structure, identifying list elements, and extracting the text content or other relevant attributes of each item. The extracted data is then typically stored in a structured format, such as a database or spreadsheet, for further processing and analysis.
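The parse, identify, and extract steps described above can be sketched with Python's standard `html.parser` module. This is a minimal illustration rather than a production parser: the class name `ListItemExtractor` and the helper `extract_list_items` are hypothetical, and the sketch assumes flat (non-nested) lists with explicitly closed `<li>` tags.

```python
from html.parser import HTMLParser


class ListItemExtractor(HTMLParser):
    """Collect the text of every <li> found inside a <ul> or <ol>."""

    def __init__(self):
        super().__init__()
        self.items = []        # finished list-item strings
        self._list_depth = 0   # number of <ul>/<ol> elements currently open
        self._buffer = None    # text pieces of the <li> being read, or None

    def handle_starttag(self, tag, attrs):
        if tag in ("ul", "ol"):
            self._list_depth += 1
        elif tag == "li" and self._list_depth > 0:
            self._buffer = []

    def handle_data(self, data):
        # Only keep text that appears inside an open <li>.
        if self._buffer is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "li" and self._buffer is not None:
            # Collapse runs of whitespace into single spaces.
            text = " ".join("".join(self._buffer).split())
            if text:
                self.items.append(text)
            self._buffer = None
        elif tag in ("ul", "ol") and self._list_depth > 0:
            self._list_depth -= 1


def extract_list_items(html):
    """Return the text of each list item in the given HTML string."""
    parser = ListItemExtractor()
    parser.feed(html)
    parser.close()
    return parser.items
```

The returned list of strings can then be written to a spreadsheet or database for further processing.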

Types of Lists Targeted by List Crawlers

List crawlers can handle a variety of list types, including ordered lists (<ol>), unordered lists (<ul>), and nested lists (lists within lists). They are designed to accommodate different HTML structures and variations in list formatting. The ability to handle complex nested structures is crucial for extracting data from intricate web pages.
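Nested lists are easier to work with when the hierarchy is preserved. The following sketch, again using only the standard library, builds a small tree of dictionaries from nested `<li>` elements. The names `NestedListParser` and `parse_nested_lists` are hypothetical, and the code assumes well-formed markup in which every `<li>` is explicitly closed.

```python
from html.parser import HTMLParser


class NestedListParser(HTMLParser):
    """Build a tree of {"text": str, "children": list} dicts from nested lists."""

    def __init__(self):
        super().__init__()
        self.roots = []    # top-level list items
        self._stack = []   # currently open <li> nodes, outermost first

    def handle_starttag(self, tag, attrs):
        if tag == "li":
            node = {"text": "", "children": []}
            # Attach to the enclosing <li> if there is one, else to the roots.
            parent = self._stack[-1]["children"] if self._stack else self.roots
            parent.append(node)
            self._stack.append(node)

    def handle_data(self, data):
        # Text belongs to the innermost open <li>.
        if self._stack:
            self._stack[-1]["text"] += data

    def handle_endtag(self, tag):
        if tag == "li" and self._stack:
            node = self._stack.pop()
            node["text"] = " ".join(node["text"].split())


def parse_nested_lists(html):
    """Return the list items of an HTML string as a nested tree."""
    parser = NestedListParser()
    parser.feed(html)
    parser.close()
    return parser.roots
```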

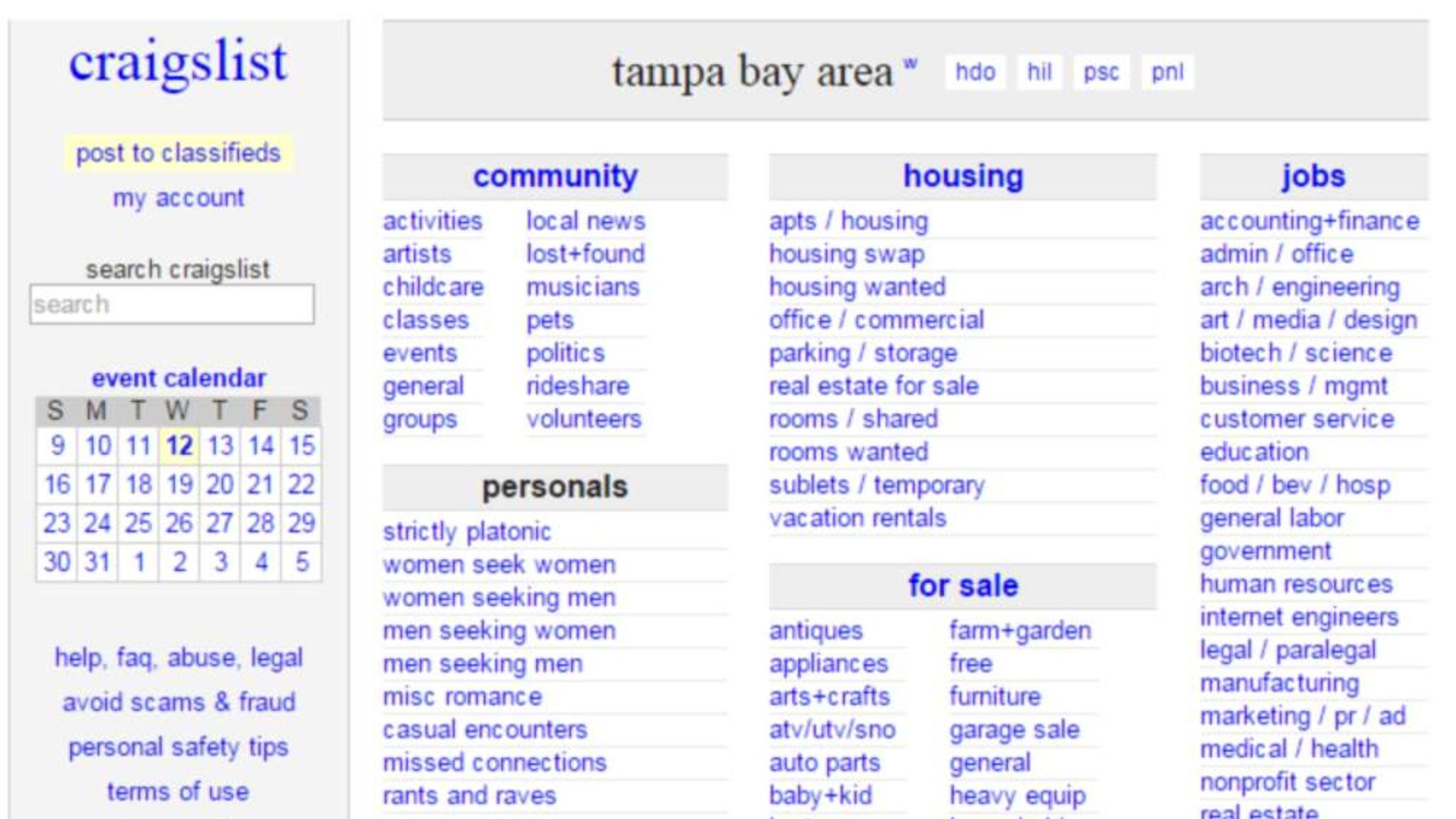

Examples of Websites for Effective List Crawler Use

List crawlers find applications across numerous websites. E-commerce sites (e.g., Amazon, eBay) offer product lists ideal for price comparison and market research. News websites provide article lists useful for sentiment analysis. Job boards (e.g., Indeed, LinkedIn) contain job postings that can be scraped for specific criteria. Academic databases present research papers suitable for meta-analysis.

List Crawler Operation Flowchart

A simplified flowchart for a list crawler would include the following steps: 1. Start; 2. Fetch webpage; 3. Parse HTML; 4. Identify lists; 5. Extract list items; 6. Store data; 7. Repeat for next page (if pagination exists); 8. End.

Data Extraction Techniques

Efficient and reliable data extraction from lists requires a combination of techniques. The choice of method depends on the complexity of the list structure and the desired level of accuracy.

Comparison of Data Extraction Methods

Regular expressions offer a powerful, albeit sometimes brittle, approach to extracting data based on patterns. DOM parsing provides a more robust method, leveraging the website’s HTML structure for precise extraction. While regular expressions are faster for simple lists, DOM parsing handles complex structures and variations more effectively. Poorly structured lists often require a combination of techniques or pre-processing steps.
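The brittleness of a pattern-based approach is easy to demonstrate. In the sketch below, the sample markup, the naive pattern, and the function names are all illustrative assumptions. The standard library's event-based `HTMLParser` stands in for a full DOM library such as Beautiful Soup, so treat it as an approximation of DOM parsing rather than the real thing.

```python
import re
from html.parser import HTMLParser

HTML = """
<ul>
  <li>Alpha</li>
  <li class="featured">Beta</li>
  <li>Gamma <em>new</em></li>
</ul>
"""

# Pattern-based: only matches <li> with no attributes and no child tags.
NAIVE_LI = re.compile(r"<li>([^<]*)</li>")


def items_by_regex(html):
    return [match.strip() for match in NAIVE_LI.findall(html)]


class _LiText(HTMLParser):
    """Gather the text of each top-level <li>, whatever its attributes."""

    def __init__(self):
        super().__init__()
        self.items, self._depth, self._parts = [], 0, []

    def handle_starttag(self, tag, attrs):
        if tag == "li":
            self._depth += 1

    def handle_data(self, data):
        if self._depth:
            self._parts.append(data)

    def handle_endtag(self, tag):
        if tag == "li" and self._depth:
            self._depth -= 1
            if self._depth == 0:
                self.items.append(" ".join("".join(self._parts).split()))
                self._parts = []


def items_by_parser(html):
    parser = _LiText()
    parser.feed(html)
    parser.close()
    return parser.items


print(items_by_regex(HTML))   # ['Alpha']
print(items_by_parser(HTML))  # ['Alpha', 'Beta', 'Gamma new']
```

The pattern misses the item with a `class` attribute and the item containing an `<em>` tag, while the parser-based version recovers all three.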

Challenges of Extracting Data from Poorly Structured Lists

Inconsistent formatting, missing tags, and unexpected HTML structures pose significant challenges. Robust error handling and flexible parsing strategies are necessary to deal with these inconsistencies. Data cleaning and validation steps are often crucial for ensuring data quality.

Best Practices for Handling Variations in List Formatting

Flexible parsing, error handling, and data validation are key. Prioritizing DOM parsing over regular expressions for complex lists improves robustness. Techniques like fuzzy matching can help reconcile variations in data fields, such as misspelled or inconsistently worded labels. Regularly reviewing and updating the crawler to adapt to changes in website structure is crucial.
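As one possible illustration of fuzzy matching and validation, the sketch below maps scraped field labels onto a set of canonical names with `difflib` and rejects values that do not look like prices. The field names, the 0.6 cutoff, and the accepted price format (optional currency symbol, comma thousands separators, dot decimals) are assumptions chosen for the example.

```python
import difflib
import re

# Hypothetical canonical field names for a product list.
CANONICAL_FIELDS = ["price", "product name", "availability"]

# Assumed price format: optional symbol, comma thousands, dot decimals.
PRICE_RE = re.compile(r"[$€£]?\s*(\d{1,3}(?:,\d{3})*|\d+)(\.\d{1,2})?")


def normalize_field(label, cutoff=0.6):
    """Map a scraped label to a canonical field name, or None if nothing is close."""
    cleaned = label.strip().lower().rstrip(":")
    matches = difflib.get_close_matches(cleaned, CANONICAL_FIELDS, n=1, cutoff=cutoff)
    return matches[0] if matches else None


def parse_price(raw):
    """Return the price as a float, or None when the text is not a valid price."""
    match = PRICE_RE.fullmatch(raw.strip())
    if match is None:
        return None
    whole = match.group(1).replace(",", "")
    fraction = match.group(2) or ""
    return float(whole + fraction)
```

Returning `None` instead of raising keeps a single malformed row from stopping the crawl; the caller can log and skip such rows.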

Comparison of Data Extraction Method Efficiency and Reliability

| Method | Efficiency | Reliability | Suitability |
|---|---|---|---|
| Regular Expressions | High (for simple lists) | Moderate (prone to errors with variations) | Simple, well-structured lists |
| DOM Parsing | Moderate | High (handles variations better) | Complex, poorly structured lists |
| XPath | Moderate | High (precise targeting of elements) | Complex lists with well-defined structure |

Ethical Considerations and Legal Implications

Responsible data scraping involves adhering to ethical guidelines and legal frameworks. Respecting website owners’ wishes and avoiding actions that could harm their websites is paramount.

Ethical Implications of Using List Crawlers

Overloading websites with requests, scraping sensitive data without permission, and using the data for unethical purposes are major ethical concerns. Responsible scraping prioritizes minimizing impact on the target website and using the data ethically.

Potential Legal Issues Related to Data Scraping

Unauthorized data scraping can lead to legal action on several grounds, including copyright infringement, breach of a website’s terms of service, and violations of data privacy regulations. Understanding and complying with the laws that apply in your jurisdiction is crucial.

Respecting robots.txt Directives and Website Terms of Service

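Always check a website’s `robots.txt` file to see which paths its owner asks crawlers to avoid. Note that `robots.txt` expresses the owner’s crawling preferences and does not by itself grant permission to reuse the data. Adhering to a website’s terms of service is essential to avoid legal repercussions.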
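Python's standard library includes `urllib.robotparser` for this check. The sketch below parses an inlined example file so that it runs offline; the rules, the `example.com` URLs, and the crawler name are placeholders, not taken from any real site.

```python
from urllib.robotparser import RobotFileParser

# Example rules, inlined so the sketch runs offline. A real crawler would call
# parser.set_url("https://example.com/robots.txt") followed by parser.read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

USER_AGENT = "example-list-crawler"  # hypothetical crawler name


def build_parser(robots_text):
    """Return a RobotFileParser loaded with the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)
print(parser.can_fetch(USER_AGENT, "https://example.com/products"))      # True
print(parser.can_fetch(USER_AGENT, "https://example.com/private/list"))  # False
print(parser.crawl_delay(USER_AGENT))                                    # 5
```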

Best Practices for Responsible Data Scraping

- Respect `robots.txt`

- Adhere to website terms of service

- Minimize requests to avoid overloading the website

- Use polite scraping techniques (e.g., delays between requests)

- Clearly identify yourself and your purpose

- Handle data responsibly and ethically
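Two of these practices, delays between requests and clear identification, can be sketched in a few lines. The `Throttle` class and the header values below are hypothetical; the crawler name and contact address are placeholders to replace with your own. The clock and sleep functions are injectable only so the behavior can be tested without real waiting.

```python
import time


class Throttle:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, min_delay, clock=time.monotonic, sleep=time.sleep):
        self.min_delay = min_delay
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Block until at least min_delay seconds have passed since the last call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_delay - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


# Hypothetical identifying headers; the name and contact address are placeholders.
HEADERS = {
    "User-Agent": "example-list-crawler/0.1 (+mailto:crawler-admin@example.com)",
}
```

Calling `throttle.wait()` immediately before each request keeps the request rate at or below one per `min_delay` seconds.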

Applications and Use Cases

List crawlers find wide applications across various fields, significantly enhancing data collection and analysis capabilities.

List Crawler Applications in Different Fields

E-commerce price comparison, research data collection (academic papers, market reports), job market analysis, real estate data aggregation, and social media sentiment analysis are just a few examples. The ability to automate data extraction from lists saves time and resources, allowing for more efficient analysis.

Building a Database of Product Information Using a List Crawler

A list crawler can extract product names, descriptions, prices, and other relevant attributes from e-commerce websites. This data can then be stored in a structured database for analysis, price comparison, or building a product catalog.
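A minimal sketch of such a store, using the standard `sqlite3` module, might look like the following. The table layout, the column names, and the choice of the product URL as the primary key are assumptions made for illustration.

```python
import sqlite3


def open_store(path=":memory:"):
    """Open (or create) a product database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS products (
               url TEXT PRIMARY KEY,
               name TEXT NOT NULL,
               price REAL,
               description TEXT
           )"""
    )
    return conn


def save_products(conn, products):
    """Insert products; re-crawling a URL replaces the previously stored row."""
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            """INSERT OR REPLACE INTO products (url, name, price, description)
               VALUES (:url, :name, :price, :description)""",
            products,
        )
```

Each product is passed as a dictionary with the keys `url`, `name`, `price`, and `description`. Keying on the URL means repeated crawls update prices in place instead of accumulating duplicates.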

Hypothetical Scenario Illustrating the Benefits of a List Crawler

Imagine a market research firm needing to analyze customer reviews for a specific product across multiple e-commerce platforms. A list crawler could efficiently gather these reviews, providing valuable insights into customer sentiment and product perception, far exceeding the capabilities of manual data collection.

Potential Applications of a List Crawler in Market Research

- Competitor price monitoring

- Customer sentiment analysis

- Product review aggregation

- Market trend identification

- Brand reputation monitoring

Technical Implementation Aspects

Building a robust list crawler requires careful consideration of programming languages, libraries, and error handling techniques.

Programming Languages for List Crawlers

Python and Java are commonly used due to their extensive libraries and robust frameworks for web scraping and data processing. Python’s ease of use and rich ecosystem of libraries like Beautiful Soup and Scrapy make it particularly popular.

Key Libraries and Tools

Python libraries such as Beautiful Soup (for HTML parsing), Scrapy (for building crawlers), and Requests (for making HTTP requests) are essential. Java libraries like Jsoup (for HTML parsing) and HttpClient (for making HTTP requests) provide similar functionalities.

Techniques for Handling Errors and Exceptions

Implementing robust error handling is crucial. Techniques include try-except blocks (Python) or try-catch blocks (Java) to gracefully handle network errors, invalid HTML, and other unexpected situations. Retrying failed requests with exponential backoff can improve resilience.
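Retrying with exponential backoff can be sketched as a small wrapper around any callable. The function name, the default of four attempts, and the one-second base delay are assumptions. The wrapper retries on `OSError` by default because Python's built-in network exceptions such as `ConnectionError` and `TimeoutError`, as well as `urllib.error.URLError`, are subclasses of it.

```python
import time


def retry_with_backoff(func, max_attempts=4, base_delay=1.0,
                       retry_on=(OSError,), sleep=time.sleep):
    """Call func(), retrying on the given exceptions with doubling delays.

    Delays are base_delay, 2 * base_delay, 4 * base_delay, and so on.
    The last failure is re-raised once max_attempts is reached.
    """
    for attempt in range(max_attempts):
        try:
            return func()
        except retry_on:
            if attempt == max_attempts - 1:
                raise
            sleep(base_delay * (2 ** attempt))
```

A production crawler would often add random jitter to each delay so that many workers do not retry at the same instant; it is left out here to keep the sketch short.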

Pseudo-code Algorithm for a List Crawler Handling Pagination

    Algorithm ListCrawler(URL):
        Initialize data storage
        while URL != NULL:
            Fetch webpage from URL
            Parse HTML and extract list items
            Store extracted data
            Find next page URL (if pagination exists)
            Update URL (NULL if there is no next page)
        return data storage
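The pseudo-code above translates fairly directly into Python. In this sketch the fetching, extraction, and next-link detection are passed in as functions, so the loop itself stays independent of any particular HTTP or parsing library. The function name `crawl_list`, its parameters, and the `max_pages` safety limit are assumptions added for illustration.

```python
from urllib.parse import urljoin


def crawl_list(start_url, fetch, extract_items, find_next_url, max_pages=100):
    """Follow pagination from start_url and return all extracted items.

    fetch(url) returns the page HTML, extract_items(html) returns a list of
    items, and find_next_url(html) returns the next page's href or None.
    """
    results = []
    seen = set()  # guards against pagination loops
    url = start_url
    while url is not None and url not in seen and len(seen) < max_pages:
        seen.add(url)
        html = fetch(url)
        results.extend(extract_items(html))
        next_href = find_next_url(html)
        # Resolve relative links such as "?page=2" against the current URL.
        url = urljoin(url, next_href) if next_href else None
    return results
```

Compared with the pseudo-code, the sketch adds two safeguards: a set of visited URLs, so a "next" link that points back to an earlier page cannot cause an endless loop, and an upper bound on the number of pages.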

Performance Optimization

Optimizing a list crawler for speed and efficiency is crucial, especially when dealing with large datasets or high-traffic websites.

Strategies for Improving Crawler Speed and Efficiency

Techniques include using asynchronous requests, employing caching mechanisms to avoid redundant requests, optimizing database interactions, and employing efficient data structures for storing and processing extracted data. Careful consideration of the target website’s structure and response times is also crucial.
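Asynchronous requests and caching can be combined in a short `asyncio` sketch. The `fetch` argument is assumed to be any coroutine function that takes a URL and returns the page body (for example, one built on an async HTTP client); `fetch_all` and `max_concurrent` are hypothetical names. The semaphore caps the number of requests in flight so that concurrency does not turn into overload.

```python
import asyncio


async def fetch_all(urls, fetch, max_concurrent=5):
    """Fetch each distinct URL once, with at most max_concurrent requests in flight."""
    semaphore = asyncio.Semaphore(max_concurrent)
    cache = {}  # url -> response body

    async def fetch_one(url):
        async with semaphore:
            cache[url] = await fetch(url)

    unique_urls = list(dict.fromkeys(urls))  # drop duplicates, keep order
    await asyncio.gather(*(fetch_one(url) for url in unique_urls))
    return cache
```

Deduplicating the URL list before fetching is the simplest form of caching: a page that appears several times in the input is requested only once.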

Managing Network Requests and Avoiding Website Overloading

Implementing delays between requests and respecting the website’s `robots.txt` file are essential for avoiding overload of the target website and potential bans. A rotating proxy pool spreads requests across IP addresses, but it does not reduce the total load on the target server, so it is not a substitute for throttling.

Best Practices for Handling Large Datasets

Employing techniques such as data streaming, chunking, and parallel processing is vital for efficiently managing large datasets. Utilizing efficient database systems and data structures is crucial.
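Streaming and chunking can be illustrated with a generator that hands rows to a CSV writer in fixed-size batches, so the full dataset never has to sit in memory at once. The function names and the default chunk size of 1000 are assumptions.

```python
import csv
import itertools


def chunked(iterable, size):
    """Yield successive lists of up to `size` items from any iterable."""
    iterator = iter(iterable)
    while True:
        chunk = list(itertools.islice(iterator, size))
        if not chunk:
            return
        yield chunk


def write_rows_in_chunks(rows, out_file, fieldnames, chunk_size=1000):
    """Stream dict rows to a CSV file object chunk by chunk; return the row count."""
    writer = csv.DictWriter(out_file, fieldnames=fieldnames)
    writer.writeheader()
    total = 0
    for chunk in chunked(rows, chunk_size):
        writer.writerows(chunk)
        total += len(chunk)
    return total
```

Because `rows` can be a generator fed directly by the crawler, extraction and storage can overlap instead of running as two separate passes.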

Comparison of Optimization Techniques and Their Impact on Performance

| Technique | Impact on Speed | Impact on Reliability | Complexity |
|---|---|---|---|
| Asynchronous Requests | High | Moderate | Moderate |
| Caching | High | High | Low |
| Database Optimization | High | High | High |
| Data Streaming | High | High | High |

Visualization and Presentation of Extracted Data

Effective data visualization is crucial for communicating insights derived from extracted list data.

Methods for Visualizing Extracted Data

Bar charts, line graphs, scatter plots, pie charts, and maps can be used depending on the nature of the data. Interactive dashboards can provide a dynamic and engaging way to explore the data.

Effective Ways to Present Extracted Data to Different Audiences

Tailoring the visualization and presentation style to the audience’s technical expertise and interests is crucial. Simple charts and clear summaries are best for non-technical audiences, while more complex visualizations and detailed analyses are suitable for technical audiences.

Visual Representation of Product Prices

A bar chart could effectively represent product prices, with product names on the x-axis and prices on the y-axis. The height of each bar would correspond to the price, allowing for easy comparison of prices across different products. Color-coding could further highlight price ranges or categories.
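In practice a plotting library would draw this chart. As a dependency-free illustration of the same idea, the sketch below renders a plain-text version, rotated so that product names run down the left side and bar length corresponds to price. The function name and the sample prices are hypothetical.

```python
def text_bar_chart(prices, width=40, symbol="#"):
    """Return a horizontal bar chart as a string; bar length is proportional to price."""
    if not prices:
        return ""
    top = max(prices.values())
    label_width = max(len(name) for name in prices)
    lines = []
    for name, price in prices.items():
        # Scale each bar relative to the most expensive product.
        bar_len = round(price / top * width) if top > 0 else 0
        lines.append(f"{name:<{label_width}} | {symbol * bar_len} {price:.2f}")
    return "\n".join(lines)


print(text_bar_chart({"Mouse": 10.0, "Keyboard": 20.0}, width=10))
# Mouse    | ##### 10.00
# Keyboard | ########## 20.00
```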

How Different Data Visualizations Highlight Different Aspects of Extracted Data

Bar charts effectively show comparisons between categories. Line graphs illustrate trends over time. Scatter plots reveal correlations between variables. Pie charts represent proportions of a whole. Choosing the appropriate visualization method depends on the specific insights you want to highlight.

In conclusion, list crawlers offer a powerful means of gathering valuable data from the web, providing insights across numerous fields. However, responsible use is paramount. By understanding the technical aspects, ethical implications, and legal considerations discussed here, developers and researchers can harness the power of list crawlers while upholding ethical standards and respecting website terms of service. The ability to efficiently extract, analyze, and visualize this data opens up new opportunities for informed decision-making and innovative applications across various industries.

Quick FAQs

What are the limitations of list crawlers?

List crawlers can be limited by website design inconsistencies, dynamic content loading (JavaScript), CAPTCHAs, and rate limits imposed by websites to prevent abuse.

How can I avoid getting blocked by websites while using a list crawler?

Respect robots.txt, implement delays between requests, use a rotating IP address pool, and be mindful of the website’s server load. Always review and adhere to the website’s terms of service.

What programming languages are best suited for building list crawlers?

Python, with its rich ecosystem of libraries like Beautiful Soup and Scrapy, is a popular choice. Java is another strong contender, offering scalability and robustness.

How do I handle pagination when building a list crawler?

Identify the pattern in the website’s pagination URLs (e.g., page numbers in the URL). Your crawler should automatically detect and follow these links to retrieve data from subsequent pages.