A list vrawler is a powerful data extraction tool that offers both considerable potential and significant challenges. This guide explains how list vrawling works, the ethical questions it raises, and how it is applied across industries. We’ll examine different methods, address potential legal pitfalls, and outline best practices for responsible data collection. Whether you’re a developer, researcher, or business professional, understanding list vrawling is increasingly important in today’s data-driven world.

This guide aims to give a complete picture of list vrawling. It starts with the core concept and its uses, moves on to the technical details of implementation, and then covers the ethical and legal questions involved. We’ll also cover advanced techniques, security measures, and future trends, so you can use this tool responsibly and effectively.

Understanding List Vrawlers

List vrawlers are automated programs designed to extract specific data from online lists. They systematically navigate websites and APIs, identifying and retrieving target information, often in bulk. This streamlines data acquisition for many applications, but it requires careful attention to ethical and legal implications.

Defining “List Vrawler”

A list vrawler is a software application that automatically extracts data from online lists. Its primary function is to efficiently collect structured information, such as email addresses, product details, or contact information, from various online sources. These lists can range from simple, static HTML lists to complex, dynamically generated lists found within web applications.


List vrawlers can target a wide variety of lists, including those found on websites, within APIs, or even in downloadable files. Examples include product catalogs, contact lists, email directories, job postings, research papers, and social media profiles. The specific types of lists targeted depend entirely on the vrawler’s purpose and configuration.

The applications of list vrawlers are diverse and span multiple industries. Marketing and sales teams use them for lead generation and customer relationship management. Recruiters utilize them for candidate sourcing. Researchers employ them for data collection and analysis. E-commerce businesses leverage them for price comparison and competitive analysis.

Technical Aspects of List Vrawling

List vrawling relies on several key technologies and techniques. Web scraping libraries, such as Beautiful Soup (Python) or Cheerio (Node.js), parse HTML and XML to extract data. APIs, when available, offer a more structured and efficient approach. Regular expressions are used to identify patterns within the data. The process often involves HTTP requests to fetch web pages, followed by data extraction and cleaning.
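The following minimal sketch illustrates the parsing step. It pulls the text of every `<li>` element out of a static HTML list. It uses Python’s standard-library `html.parser` rather than Beautiful Soup so it runs without third-party packages; with Beautiful Soup, `soup.find_all("li")` would locate the same elements. It assumes list items are explicitly closed with `</li>`. `ListItemExtractor` and `extract_list_items` are hypothetical names.

```python
from html.parser import HTMLParser


class ListItemExtractor(HTMLParser):
    """Collect the whitespace-normalized text of each top-level <li> element."""

    def __init__(self) -> None:
        super().__init__()
        self.items: list[str] = []
        self._depth = 0  # number of currently open <li> tags
        self._buffer: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "li":
            if self._depth == 0:
                self._buffer = []  # start collecting a new top-level item
            self._depth += 1

    def handle_endtag(self, tag):
        if tag == "li" and self._depth > 0:
            self._depth -= 1
            if self._depth == 0:
                # Text from any nested <li> has been folded into this outermost item.
                self.items.append(" ".join("".join(self._buffer).split()))

    def handle_data(self, data):
        if self._depth > 0:
            self._buffer.append(data)


def extract_list_items(html: str) -> list[str]:
    parser = ListItemExtractor()
    parser.feed(html)
    parser.close()
    return parser.items


print(extract_list_items("<ul><li>Widget A</li><li> Widget <b>B</b> </li></ul>"))
# ['Widget A', 'Widget B']
```

Inline tags such as `<b>` are ignored, but their text is kept, which is usually what a list vrawler wants.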

Different list vrawling methods exist, each with its advantages and disadvantages. Scraping pages directly is widely used but fragile: a change to a site’s markup can silently break the extraction logic. API-based extraction is more reliable, but it requires API access and is usually subject to rate limits. Hybrid approaches, which combine both methods, are often the most robust.
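The API route can be sketched as a walk over a paginated JSON endpoint. Several details are assumptions for illustration: the response shape (an `items` array plus a `next_page` number that is `null` on the last page), the `?page=` query parameter, and the function names. Real APIs differ, and most also require authentication.

```python
import json
import urllib.request
from typing import Callable, Iterator, Optional

FetchJson = Callable[[str], dict]


def http_get_json(url: str) -> dict:
    """Fetch a URL and decode its JSON body (no auth, no retries)."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)


def iter_api_items(base_url: str, fetch_json: FetchJson = http_get_json) -> Iterator[dict]:
    """Yield every item from a paginated list endpoint.

    Assumes a hypothetical response shape:
    {"items": [...], "next_page": <int, or null on the last page>}
    """
    page: Optional[int] = 1
    while page is not None:
        data = fetch_json(f"{base_url}?page={page}")
        yield from data.get("items", [])
        page = data.get("next_page")
```

Because `fetch_json` is a parameter, the pagination logic can be tested against canned responses, or pointed at an authenticated client, without changing the loop.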

A basic list vrawler algorithm typically involves these steps:

- Fetch the target URL

- Parse the HTML/XML content

- Identify and extract the target data using regular expressions or XPath

- Clean and format the extracted data

- Store the data in a database or file

- Repeat for each remaining URL or page in the list
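The fetch, extract, clean, store, and repeat cycle can be sketched end to end. This is a minimal sketch that makes three assumptions:

- Target items are marked up as `<li class="item">Name</li>`, which is a hypothetical pattern.
- Extraction uses a regular expression instead of a full parser.
- Results go into SQLite.

Regex extraction like this is brittle and only suitable for simple, predictable markup.

```python
import html
import re
import sqlite3
import urllib.request
from typing import Callable, Iterable

# Hypothetical markup for the items we want: <li class="item">Name</li>
ITEM_RE = re.compile(r'<li class="item">(.*?)</li>', re.DOTALL)
TAG_RE = re.compile(r"<[^>]+>")


def fetch(url: str) -> str:
    """Fetch step: download the page as text."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")


def extract(page: str) -> list[str]:
    """Parse/extract step: a regex stands in for a real HTML parser here."""
    return ITEM_RE.findall(page)


def clean(raw: str) -> str:
    """Clean step: drop inner tags, decode entities, collapse whitespace."""
    return " ".join(html.unescape(TAG_RE.sub("", raw)).split())


def crawl(urls: Iterable[str], db: sqlite3.Connection,
          fetch_fn: Callable[[str], str] = fetch) -> int:
    """Repeat the cycle for every URL and return the number of rows stored."""
    db.execute("CREATE TABLE IF NOT EXISTS items (url TEXT, name TEXT)")
    stored = 0
    for url in urls:
        for raw in extract(fetch_fn(url)):
            name = clean(raw)
            if name:  # skip items that are empty after cleaning
                # Store step: one row per extracted item.
                db.execute("INSERT INTO items (url, name) VALUES (?, ?)", (url, name))
                stored += 1
    db.commit()
    return stored
```

Passing `sqlite3.connect(":memory:")` and a dictionary lookup as `fetch_fn` is a convenient way to try the pipeline offline.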

Ethical and Legal Considerations

Ethical list vrawling prioritizes respect for website terms of service, data privacy, and user consent. Scraping data without permission, violating robots.txt directives, or collecting sensitive personal information without consent raises serious ethical concerns.

Legal challenges include copyright infringement, violation of terms of service, and breaches of privacy laws like GDPR and CCPA. Companies can face legal action for unauthorized data scraping, leading to significant fines and reputational damage.

Responsible list vrawling practices include:

- respecting robots.txt
- adhering to website terms of service
- obtaining explicit consent when collecting personal data
- implementing measures to protect user privacy

Rate limiting requests is also crucial. So are other polite scraping techniques, such as identifying the crawler with an honest User-Agent string and honoring any Crawl-delay directive in robots.txt.
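Two of these practices can be handled with Python’s standard-library `urllib.robotparser`: honoring robots.txt and spacing out requests. The sketch makes several assumptions:

- The user-agent string is hypothetical.
- `PoliteFetcher` is an illustrative name.
- The fetcher falls back to a one-second delay when robots.txt sets no `Crawl-delay`.

It parses robots.txt from a string; live code would load the site’s file with `set_url()` and `read()`.

```python
import time
import urllib.robotparser
from typing import Callable, Optional

USER_AGENT = "example-list-vrawler/0.1"  # hypothetical; use a real, contactable name


def load_robots(robots_txt: str) -> urllib.robotparser.RobotFileParser:
    """Parse robots.txt text (live code would call set_url() and read())."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


class PoliteFetcher:
    """Skip URLs disallowed by robots.txt and space out the remaining requests."""

    def __init__(self, robots: urllib.robotparser.RobotFileParser,
                 fetch_fn: Callable[[str], str], default_delay: float = 1.0,
                 sleep: Callable[[float], None] = time.sleep,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.robots = robots
        self.fetch_fn = fetch_fn
        crawl_delay = robots.crawl_delay(USER_AGENT)
        self.delay = float(crawl_delay) if crawl_delay is not None else default_delay
        self.sleep = sleep
        self.clock = clock
        self._last: Optional[float] = None

    def get(self, url: str) -> Optional[str]:
        if not self.robots.can_fetch(USER_AGENT, url):
            return None  # disallowed: never request it
        if self._last is not None:
            # Wait out whatever remains of the delay since the previous request.
            wait = self.delay - (self.clock() - self._last)
            if wait > 0:
                self.sleep(wait)
        self._last = self.clock()
        return self.fetch_fn(url)
```

The `sleep` and `clock` parameters exist so the timing logic can be checked without real waiting.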

List Vrawler Implementation and Examples

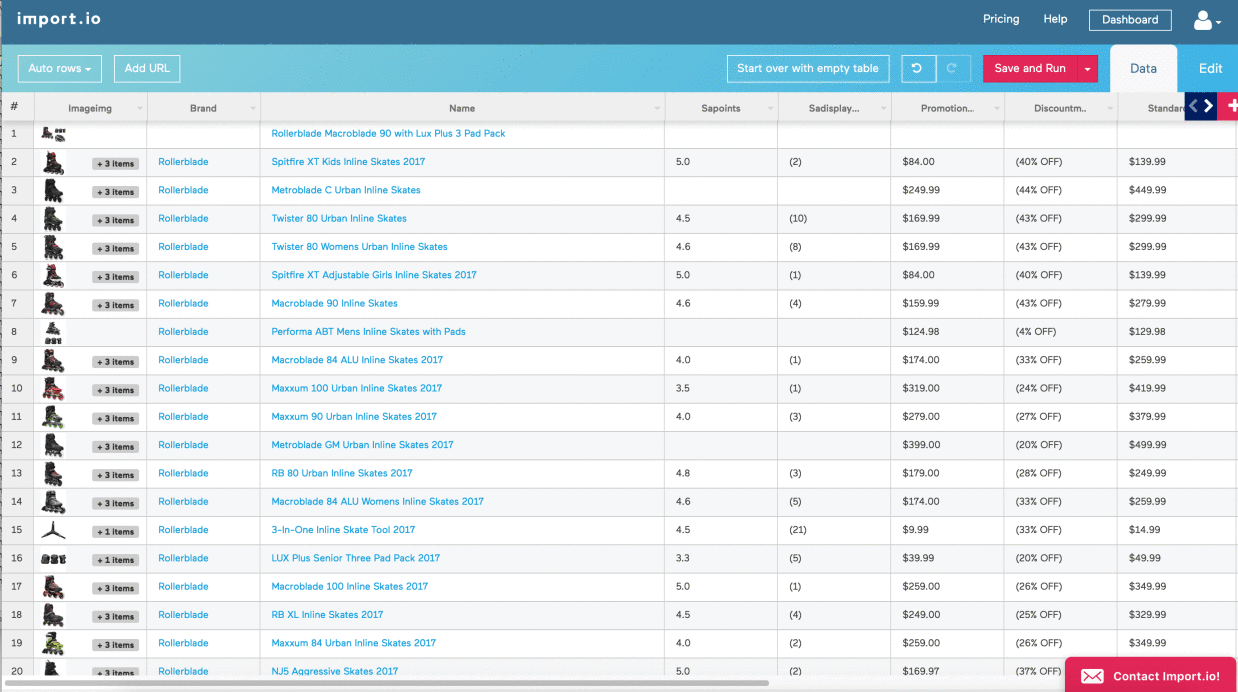

List vrawlers can extract data from various online sources. Below is a hypothetical table illustrating different scenarios:

| Source | Data Type | Extraction Method | Ethical Considerations |
|---|---|---|---|
| E-commerce website | Product prices and descriptions | Web scraping with Beautiful Soup | Respect robots.txt; rate-limit requests |
| Public API | Weather data | API calls | Follow the API’s usage guidelines |
| Job board website | Job postings | Web scraping with XPath | Check the terms of service; avoid overloading the server |
| Social media platform (public profiles) | Usernames and profile information | Web scraping with site-specific selectors | Respect privacy settings; comply with the terms of service |
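The job board row mentions XPath. The following sketch uses the limited XPath subset built into Python’s `xml.etree.ElementTree`. It assumes well-formed, XHTML-style markup in which each posting is an `<li class="job">` containing an `<h3>` title and an optional `<span class="location">`; that structure is hypothetical. Real-world HTML is often not well-formed, and full XPath 1.0 needs a library such as lxml.

```python
import xml.etree.ElementTree as ET


def extract_jobs(xhtml: str) -> list[dict]:
    """Pull title and location from each hypothetical <li class="job"> entry."""
    root = ET.fromstring(xhtml)  # raises ParseError on malformed markup
    jobs = []
    # [@class='job'] is an exact match, so class="job featured" would be skipped.
    for li in root.iterfind(".//li[@class='job']"):
        jobs.append({
            "title": (li.findtext("h3") or "").strip(),
            "location": (li.findtext("span[@class='location']") or "").strip(),
        })
    return jobs
```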

Collecting email addresses from a public website (hypothetical): A list vrawler could find email addresses in the HTML source code by matching them with a regular expression. Email addresses that identify individuals are personal data under laws such as GDPR. For that reason, this must comply with the website’s terms of service and applicable privacy law.
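The following sketch shows the pattern-matching part of that scenario. The regular expression is a deliberately simple approximation: it covers common addresses but not the full RFC 5322 grammar. `find_emails` is a hypothetical helper that returns each address once, in order of first appearance, comparing case-insensitively. It does nothing about consent or terms of service; those checks have to happen outside the code.

```python
import re

# Deliberately simple: covers common addresses, not the full RFC 5322 grammar.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def find_emails(text: str) -> list[str]:
    """Return unique email-like strings in order of first appearance."""
    seen: set[str] = set()
    found: list[str] = []
    for match in EMAIL_RE.findall(text):
        key = match.lower()  # treat Sales@ and sales@ as the same address
        if key not in seen:
            seen.add(key)
            found.append(match)
    return found
```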

Hypothetical Case Study: A marketing firm used a list vrawler to collect contact information from a list of potential clients on a business directory website. This streamlined their lead generation process, resulting in a 30% increase in qualified leads within the first quarter.

- Problem: Inefficient manual lead generation.

- Solution: Developed a list vrawler to extract contact details.

- Results: 30% increase in qualified leads.

Advanced List Vrawling Techniques

Handling dynamic websites and JavaScript-rendered content requires techniques like using headless browsers (e.g., Selenium or Puppeteer) to render the page and then extract data. These browsers simulate a real user’s interaction, allowing access to dynamically loaded content.

Optimizing speed and efficiency involves several techniques:

- asynchronous requests, which let the vrawler wait on many pages at once instead of one at a time
- caching, which avoids fetching the same page twice
- efficient data processing
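Asynchronous fetching and caching can be combined in a small amount of standard-library code. In this sketch, `asyncio.gather` runs requests concurrently, a semaphore caps how many are in flight, and a dictionary caches bodies by URL. `CachedFetcher` and the concurrency limit of 5 are illustrative assumptions. Because `urllib` is blocking, the default fetcher runs it in a worker thread with `asyncio.to_thread` (Python 3.9+); a production vrawler might use an async HTTP client instead.

```python
import asyncio
import urllib.request
from typing import Awaitable, Callable, Dict, Iterable, List

AsyncFetch = Callable[[str], Awaitable[str]]


async def threaded_fetch(url: str) -> str:
    """Run blocking urllib in a worker thread so the event loop stays free."""
    def _get() -> str:
        with urllib.request.urlopen(url, timeout=10) as resp:
            return resp.read().decode("utf-8", errors="replace")
    return await asyncio.to_thread(_get)


class CachedFetcher:
    """Fetch URLs concurrently, at most `max_concurrency` at a time, caching bodies."""

    def __init__(self, fetch: AsyncFetch = threaded_fetch, max_concurrency: int = 5) -> None:
        self._fetch = fetch
        self._sem = asyncio.Semaphore(max_concurrency)
        self._cache: Dict[str, str] = {}

    async def get(self, url: str) -> str:
        if url in self._cache:
            return self._cache[url]  # cache hit: no network request
        async with self._sem:  # limit concurrent requests
            body = await self._fetch(url)
        self._cache[url] = body
        return body

    async def get_many(self, urls: Iterable[str]) -> List[str]:
        return await asyncio.gather(*(self.get(u) for u in urls))
```

Create the fetcher inside the coroutine passed to `asyncio.run`, so the semaphore belongs to the running event loop. The cache is only consulted before a request starts. As a result, two simultaneous requests for the same URL can still both reach the network.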

Error handling typically combines two approaches. Retry mechanisms re-attempt a failed request after a delay, and that delay usually grows with each attempt (exponential backoff) so a struggling server is not hammered. Robust exception handling keeps one bad page from crashing the whole run and logs the failure for later review. Together, these make the vrawler resilient.
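A minimal sketch of retries with exponential backoff follows; random jitter is added to each delay. `with_retries` is a hypothetical helper. The default `retry_on=(OSError,)` catches network failures, because `urllib.error.URLError` and its subclass `HTTPError` both derive from `OSError`. That default also retries HTTP 404s, however. A real vrawler would usually narrow it to timeouts, connection errors, and 429/5xx responses.

```python
import logging
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")
log = logging.getLogger("vrawler")


def with_retries(fn: Callable[[], T], attempts: int = 3, base_delay: float = 0.5,
                 retry_on: Tuple[Type[BaseException], ...] = (OSError,),
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn(), retrying on the given exceptions with exponential backoff and jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except retry_on as exc:
            if attempt == attempts:
                log.error("giving up after %d attempts: %s", attempts, exc)
                raise
            delay = base_delay * 2 ** (attempt - 1)  # 0.5s, 1s, 2s, ... by default
            delay += random.uniform(0, delay / 2)    # jitter spreads out simultaneous retries
            log.warning("attempt %d failed (%s); retrying in %.2fs", attempt, exc, delay)
            sleep(delay)
    raise AssertionError("unreachable: the loop always returns or re-raises")
```

Usage: `with_retries(lambda: fetch(url))` wraps any zero-argument call, so the same helper works for page fetches, API calls, or database writes.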

Security Considerations for List Vrawlers

Security risks include unauthorized access, data breaches, and denial-of-service attacks. Protecting against these threats requires secure coding practices, input validation, and proper authentication and authorization mechanisms.
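Input validation matters when a vrawler accepts target URLs from users. Without it, the vrawler could be pointed at internal services, an attack known as server-side request forgery. One concrete form of validation is an allowlist check before any request is made. The `ALLOWED_HOSTS` set below is a hypothetical allowlist. The sketch does not cover redirects, which `urllib` follows by default, so a production version would also need to validate each redirect target.

```python
from urllib.parse import urlsplit

ALLOWED_SCHEMES = {"http", "https"}
ALLOWED_HOSTS = {"example.com", "www.example.com"}  # hypothetical allowlist


def validate_target(url: str) -> str:
    """Reject URLs outside the allowlist before any request is made."""
    parts = urlsplit(url)
    if parts.scheme not in ALLOWED_SCHEMES:
        raise ValueError(f"scheme not allowed: {parts.scheme!r}")
    if parts.username or parts.password:
        raise ValueError("embedded credentials are not allowed")
    host = parts.hostname  # urlsplit lower-cases the host name
    if host not in ALLOWED_HOSTS:
        raise ValueError(f"host not allowed: {host!r}")
    return url
```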

Security measures include using strong passwords, encrypting sensitive data, implementing rate limiting to prevent abuse, and regular security audits. Using a secure framework and incorporating best practices in secure coding is essential.
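Rate limiting is often applied to the vrawler’s own service, such as an API that lets users submit crawl jobs. A common way to implement it is a token bucket, sketched below. This is one standard version, not a prescribed design. The rate, the capacity, and the per-client keying are illustrative assumptions. The in-memory dictionary is neither thread-safe nor shared across processes, so a multi-process deployment would need shared state such as a database or cache.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.updated = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill for the time elapsed since the last call, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# One bucket per client (e.g. per API key); this keying scheme is an assumption.
buckets: Dict[str, TokenBucket] = {}


def allow_request(client_id: str) -> bool:
    if client_id not in buckets:
        buckets[client_id] = TokenBucket(rate=1.0, capacity=5)
    return buckets[client_id].allow()
```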

A secure architecture for a list vrawler might incorporate robust authentication (e.g., OAuth 2.0), authorization based on user roles and permissions, and encryption of data both in transit and at rest. Regular security testing and updates are crucial for maintaining security.

Future Trends in List Vrawling

Future trends include the increasing use of AI and machine learning to improve data extraction accuracy and efficiency. More sophisticated techniques for handling dynamic content and complex websites are likely to emerge. A greater focus on ethical considerations and compliance with data privacy regulations is expected.

Advancements might involve improved algorithms for handling complex data structures, more robust error handling, and better integration with cloud platforms. The development of tools and frameworks that simplify ethical and legal compliance will likely become increasingly important.

Emerging challenges include the ongoing arms race between list vrawlers and websites employing anti-scraping measures. The need for more sophisticated techniques to bypass these measures while remaining ethically and legally compliant will continue to drive innovation. The increasing complexity of websites and the prevalence of dynamic content will pose ongoing challenges.

List vrawling presents a powerful capability for data acquisition, but responsible implementation is paramount. By understanding the technical intricacies, ethical implications, and legal frameworks surrounding list vrawling, we can harness its potential while mitigating risks. This guide has provided a foundational understanding, equipping you to navigate the complexities of this field and utilize list vrawling responsibly and effectively for your specific needs.

Remember to always prioritize ethical considerations and comply with relevant laws and regulations.

Top FAQs: List Vrawler

What are the limitations of list vrawlers?

List vrawlers can be limited by website structure, dynamic content (JavaScript rendering), anti-scraping measures implemented by websites, and rate limits imposed by servers. They may also struggle with unstructured or inconsistently formatted data.

How can I avoid legal issues when using a list vrawler?

Always respect website terms of service, robots.txt directives, and copyright laws. Avoid scraping personal data without explicit consent. Understand and comply with relevant data privacy regulations like GDPR and CCPA.

Are there free list vrawler tools available?

Some open-source libraries and tools are available, but they may require programming skills to utilize effectively. Many commercial solutions also exist, offering varying levels of functionality and support.

What is the difference between list vrawling and web scraping?

While often used interchangeably, list vrawling typically focuses on extracting specific lists or structured data from websites, whereas web scraping encompasses a broader range of data extraction techniques, including unstructured data.