A list crawler is a tool for extracting data from online lists, and it opens up possibilities for data analysis and informed decision-making. This guide explains how list crawlers work, the techniques they use to extract data, the ethical and legal considerations involved, and their real-world applications across industries. It also covers designing efficient list crawlers, dealing with anti-scraping measures, and acquiring data responsibly.

Prepare to unlock the potential of structured web data.

From understanding the core functionalities of a list crawler and its differences from other web crawlers, to mastering data extraction methods and navigating legal and ethical considerations, this guide offers a practical and insightful journey into the world of list crawling. We’ll examine various data extraction libraries, discuss strategies for handling pagination and anti-scraping measures, and explore techniques for optimizing performance and scalability.

Through practical examples and detailed explanations, this guide empowers you to leverage the power of list crawlers effectively and responsibly.

List Crawlers: A Comprehensive Overview

List crawlers are specialized web scraping tools designed to efficiently extract data from web pages containing lists. They offer a targeted approach compared to general-purpose web crawlers, focusing on structured data presented in list formats. This article delves into the functionalities, techniques, ethical considerations, and applications of list crawlers, providing a comprehensive understanding of this powerful data extraction tool.

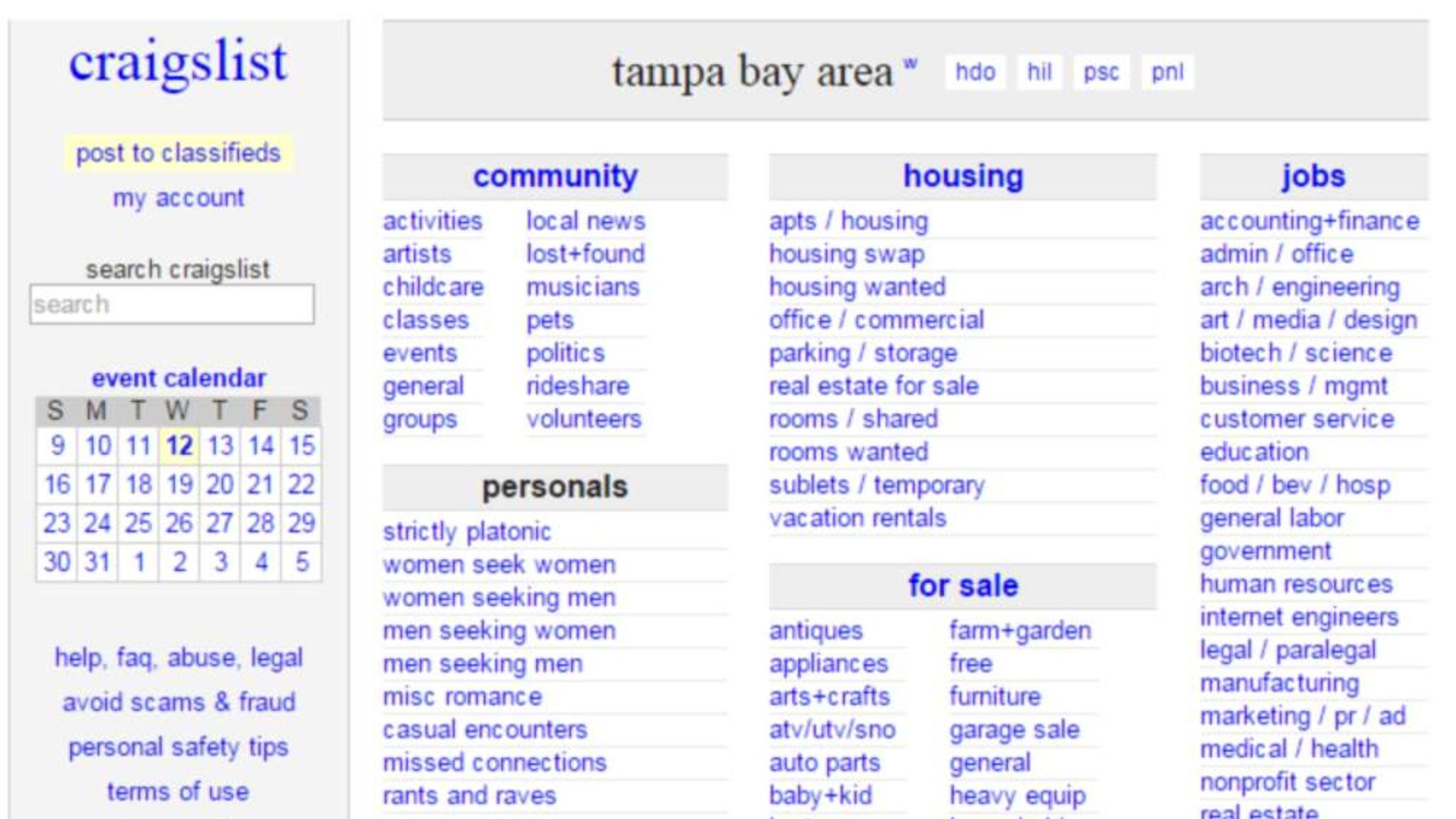


Definition and Functionality of List Crawlers

A list crawler is a program that systematically navigates websites to identify and extract data organized in list formats (e.g., unordered lists, ordered lists, tables). Unlike general web crawlers that explore entire websites, list crawlers focus solely on extracting data from lists, making them highly efficient for specific data extraction tasks.
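Below is a minimal sketch of the "identify and extract list elements" step. It uses only Python's standard-library `html.parser` and makes two assumptions: the markup is well-formed with explicit closing tags, and lists are flat (not nested). `ListExtractor` and `extract_lists` are illustrative names, not part of any library. Real pages are often messier, and that is where a more forgiving parser such as Beautiful Soup helps.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple


def _clean(fragments: List[str]) -> str:
    """Join text fragments and collapse runs of whitespace."""
    return " ".join("".join(fragments).split())


class ListExtractor(HTMLParser):
    """Collects the text of each <li> and the cells of each table row."""

    def __init__(self) -> None:
        super().__init__()
        self.items: List[str] = []               # one string per <li>
        self.rows: List[List[str]] = []          # one list of cell texts per <tr>
        self._buf: Optional[List[str]] = None    # text of the current <li>/<td>/<th>
        self._row: Optional[List[str]] = None    # cells of the current <tr>

    def handle_starttag(self, tag, attrs):
        if tag == "li":
            self._buf = []
        elif tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._buf = []

    def handle_data(self, data):
        # Only keep text that appears inside a list item or table cell.
        if self._buf is not None:
            self._buf.append(data)

    def handle_endtag(self, tag):
        if tag == "li" and self._buf is not None:
            self.items.append(_clean(self._buf))
            self._buf = None
        elif tag in ("td", "th") and self._row is not None and self._buf is not None:
            self._row.append(_clean(self._buf))
            self._buf = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


def extract_lists(html: str) -> Tuple[List[str], List[List[str]]]:
    """Return (list item texts, table rows) found in an HTML string."""
    parser = ListExtractor()
    parser.feed(html)
    parser.close()
    return parser.items, parser.rows
```

Unordered and ordered lists are treated the same way, because both use `<li>` for their items.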

Core functionalities include:

- identifying list elements on a webpage by parsing its HTML;
- extracting data from individual list items;
- handling pagination to reach every page of a list;
- storing the extracted data in a structured format (e.g., CSV, JSON).

List crawlers differ from general web crawlers in this targeted approach and in algorithms specialized for list structures. Crawl order also affects efficiency. Breadth-first traversal may suit shallow lists spread across many pages, while depth-first traversal may suit deep lists on fewer pages. The right choice depends on the target website and how its data is structured.
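The breadth-first versus depth-first distinction comes down to one detail: whether the crawl frontier behaves as a queue or as a stack. The sketch below shows this, assuming an in-memory link graph (`links`) that stands in for fetching pages. `crawl_order` is a hypothetical helper written for illustration.

```python
from collections import deque
from typing import Dict, List


def crawl_order(start: str, links: Dict[str, List[str]],
                strategy: str = "bfs", max_pages: int = 100) -> List[str]:
    """Return the order in which pages would be visited.

    `links` maps each page to the pages it links to; it stands in for
    fetching a page and extracting its links.
    """
    frontier = deque([start])
    seen = {start}                 # never enqueue the same page twice
    order: List[str] = []
    while frontier and len(order) < max_pages:
        # BFS takes the oldest entry (queue); DFS takes the newest (stack).
        page = frontier.popleft() if strategy == "bfs" else frontier.pop()
        order.append(page)
        for nxt in links.get(page, []):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append(nxt)
    return order
```

Consider a site where `index` links to two category pages and one category has a deep chain of list pages. BFS visits both categories before going deeper. The stack-based DFS instead follows one branch to its end first. Because the stack pops the most recently discovered link, it takes links in reverse source order.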

The list-crawling process can be pictured as a simple flowchart. First, identify the target URL and fetch the page content. Next, parse the HTML to locate list elements and extract data from each list item. Then follow pagination if necessary, and finally store the extracted data in the chosen format. Error handling and retry logic apply throughout, especially around network requests.
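As a sketch of that flow, the skeleton below wires the steps together. It assumes `fetch(url) -> html` and `parse(html) -> (items, next_url)` are supplied by the caller, which keeps the skeleton independent of any particular HTTP client or parsing library. The names `crawl`, `fetch_with_retry`, and `save_json` are hypothetical, and the fixed retry pause is a simplification.

```python
import json
import logging
import time
from typing import Callable, Dict, List, Optional, Tuple

log = logging.getLogger("list_crawler")

# parse() returns the items found on one page and the next page's URL (or None).
Parser = Callable[[str], Tuple[List[str], Optional[str]]]


def fetch_with_retry(fetch: Callable[[str], str], url: str,
                     retries: int, retry_delay: float) -> Optional[str]:
    """Try fetch(url) up to retries + 1 times; return None if every attempt fails."""
    for attempt in range(1, retries + 2):
        try:
            return fetch(url)
        except Exception as exc:  # network errors, HTTP errors, timeouts, ...
            log.warning("attempt %d for %s failed: %s", attempt, url, exc)
            if attempt <= retries:
                time.sleep(retry_delay)
    return None


def crawl(start_url: str, fetch: Callable[[str], str], parse: Parser,
          max_pages: int = 50, retries: int = 2,
          retry_delay: float = 5.0) -> List[Dict[str, str]]:
    """Fetch -> parse -> follow pagination, collecting one record per list item."""
    records: List[Dict[str, str]] = []
    url: Optional[str] = start_url
    for _ in range(max_pages):
        if url is None:            # no further pages
            break
        html = fetch_with_retry(fetch, url, retries, retry_delay)
        if html is None:           # give up, but keep what was already collected
            break
        items, next_url = parse(html)
        records.extend({"page": url, "text": text} for text in items)
        url = next_url
    return records


def save_json(records: List[Dict[str, str]], path: str) -> None:
    """Store the extracted records as a JSON array."""
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(records, fh, ensure_ascii=False, indent=2)
```

If a page still fails after its retries, the loop keeps the records gathered so far rather than discarding the whole run.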

Data Extraction Techniques Employed by List Crawlers

List crawlers employ various methods to extract data from list-based web pages. These methods range from simple HTML parsing to sophisticated techniques leveraging regular expressions and machine learning.

Regular expressions are frequently used to identify and extract specific patterns within the text of list items. For example, the regular expression `\d+\.\s*(.*)` captures the text that follows a manually numbered item such as "3. Blue widget". In an HTML `<ol>`, the numbers are generated by the browser and do not appear in the source, so this pattern applies to numbering written into the text itself.

Dynamically loaded lists are harder to extract, because the data may be missing from the initial HTML and inserted later by JavaScript. Handling them requires rendering the page with a browser automation tool or headless browser and waiting for the JavaScript to finish loading the list.
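Here is a small sketch of the numbered-item pattern in use with Python's `re` module. It anchors the pattern to the start of each line and replaces `\s` with `[ \t]`. Without these changes, the unanchored pattern also matches decimals in ordinary text: in "Price: 3.50 each", it captures "50 each". `numbered_items` is an illustrative helper, not a library function.

```python
import re
from typing import List

# The text after a "1." / "2." / "10." prefix at the start of a line.
# [ \t] instead of \s keeps a match from running onto the next line.
NUMBERED_ITEM = re.compile(r"^[ \t]*\d+\.[ \t]*(.*)$", re.MULTILINE)


def numbered_items(text: str) -> List[str]:
    """Return the text of each manually numbered line, stripped of whitespace."""
    return [item.strip() for item in NUMBERED_ITEM.findall(text)]
```

For example, `numbered_items("Top picks:\n1. Espresso machine\n2. Burr grinder\n")` returns `["Espresso machine", "Burr grinder"]`.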

Common data formats encountered include CSV, JSON, and XML, each offering different advantages in terms of structure and ease of processing.
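To make the three formats concrete, the following sketch serializes the same extracted records to CSV, JSON, and XML using only the standard library (`csv`, `json`, `xml.etree.ElementTree`). It assumes each record is a flat dictionary of strings whose keys are valid XML element names. The function names are hypothetical.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET
from typing import Dict, List

Record = Dict[str, str]


def to_csv(records: List[Record]) -> str:
    """CSV with one column per key (first-seen order); missing values are left blank."""
    fieldnames = list(dict.fromkeys(key for rec in records for key in rec))
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(records)
    return buf.getvalue()


def to_json(records: List[Record]) -> str:
    """A JSON array of objects; non-ASCII characters stay readable."""
    return json.dumps(records, ensure_ascii=False, indent=2)


def to_xml(records: List[Record], root_tag: str = "items", item_tag: str = "item") -> str:
    """<items><item><key>value</key>...</item>...</items>"""
    root = ET.Element(root_tag)
    for rec in records:
        item = ET.SubElement(root, item_tag)
        for key, value in rec.items():
            ET.SubElement(item, key).text = str(value)
    return ET.tostring(root, encoding="unicode")
```

CSV is the easiest format to open in a spreadsheet but flattens everything into columns. JSON and XML can hold nested structures if records later grow beyond flat key/value pairs.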

| Library Name | Language | Strengths | Weaknesses |
|---|---|---|---|
| Beautiful Soup | Python | Easy to use, versatile, supports various parsers | Can be slower for very large datasets |
| Scrapy | Python | Highly scalable, efficient, built-in features for crawling and data extraction | Steeper learning curve than Beautiful Soup |
| Cheerio | JavaScript | Fast, jQuery-like syntax, well-integrated with Node.js | Less versatile than Beautiful Soup for complex HTML |
| jsoup | Java | Robust, efficient, widely used in Java applications | Can be more verbose than Python libraries |

Ethical Considerations and Legal Implications

Ethical and legal considerations are paramount when employing list crawlers. It is crucial to respect website terms of service, follow robots.txt directives, and avoid overloading servers. Ignoring robots.txt commonly gets a crawler blocked. Depending on the jurisdiction and the site's terms of service, it can also expose the crawler's operator to legal risk. Data privacy is another critical aspect: extracting personally identifiable information without consent is unethical and, depending on jurisdiction and data protection laws, potentially illegal.

Responsible list crawling involves implementing strategies to minimize the impact on websites and respect user privacy.

- Always check the website’s robots.txt file before scraping.

- Respect the website’s terms of service and usage policies.

- Avoid overloading the website’s servers with excessive requests.

- Do not scrape personally identifiable information without consent.

- Implement delays between requests, honoring any Crawl-delay directive in robots.txt.

- Use a user-agent string that identifies your crawler.
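The first and last points can be combined in code. The sketch below uses Python's standard `urllib.robotparser`. It checks whether a URL may be fetched, reads any `Crawl-delay`, and spaces out requests accordingly. To stay offline, it parses robots.txt text that has already been downloaded; in practice, `RobotFileParser.set_url(...)` followed by `read()` fetches it directly. The user-agent string, the one-second default delay, and the helper names are all assumptions for illustration.

```python
import time
import urllib.robotparser
from typing import Optional, Tuple

# Hypothetical identifier: use your crawler's real name and a real contact address.
USER_AGENT = "ExampleListCrawler/0.1 (+mailto:crawler-admin@example.com)"


def load_rules(robots_txt: str) -> urllib.robotparser.RobotFileParser:
    """Parse robots.txt content that has already been downloaded."""
    rules = urllib.robotparser.RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


def check_url(rules: urllib.robotparser.RobotFileParser, url: str,
              user_agent: str = USER_AGENT,
              default_delay: float = 1.0) -> Tuple[bool, Optional[float]]:
    """Return (allowed, seconds to wait between requests)."""
    if not rules.can_fetch(user_agent, url):
        return False, None
    delay = rules.crawl_delay(user_agent)   # None when robots.txt sets no Crawl-delay
    return True, float(delay) if delay is not None else default_delay


def polite_wait(last_request: float, delay: float) -> float:
    """Sleep until `delay` seconds have passed since `last_request` (a time.monotonic() value)."""
    remaining = delay - (time.monotonic() - last_request)
    if remaining > 0:
        time.sleep(remaining)
    return time.monotonic()
```

A crawl loop would call `check_url` before each request, skip URLs that are disallowed, and call `polite_wait` with the returned delay before fetching.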

Applications and Use Cases of List Crawlers

List crawlers find widespread application across various industries. In e-commerce, they are used to track product prices, monitor competitor activity, and gather product information. Financial institutions use them to collect market data, track stock prices, and analyze financial news. Researchers utilize list crawlers to gather data for academic studies, while marketing professionals use them for market research and competitive analysis.

Price comparison websites heavily rely on list crawlers to aggregate product pricing data from multiple sources. Market research firms leverage them to collect data on consumer preferences, trends, and market sentiment.

Advanced Techniques and Challenges

Handling pagination, overcoming anti-scraping measures, and dealing with CAPTCHAs are common challenges in advanced list crawling. Pagination can be handled by detecting pagination links and iteratively fetching subsequent pages. Anti-scraping measures, such as IP blocking and CAPTCHAs, require sophisticated techniques to overcome. Maintaining data accuracy and reliability requires careful data validation and error handling.
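The pagination approach described above, detecting a "next" link and fetching pages one after another, might look like the sketch below. It assumes the site marks its next-page link with `rel="next"` and that pagination links are not themselves inside `<li>` elements. It also guards against loops and runaway crawls. `fetch` is a caller-supplied function (here it is assumed to return HTML), and `PageParser` and `crawl_paginated` are hypothetical names.

```python
from html.parser import HTMLParser
from typing import Callable, List, Optional
from urllib.parse import urljoin


class PageParser(HTMLParser):
    """Collects <li> texts and the href of an <a rel="next"> link, if any."""

    def __init__(self) -> None:
        super().__init__()
        self.items: List[str] = []
        self.next_href: Optional[str] = None
        self._buf: Optional[List[str]] = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "li":
            self._buf = []
        elif tag == "a" and "next" in (attrs.get("rel") or "").split():
            self.next_href = attrs.get("href")

    def handle_data(self, data):
        if self._buf is not None:
            self._buf.append(data)

    def handle_endtag(self, tag):
        if tag == "li" and self._buf is not None:
            self.items.append(" ".join("".join(self._buf).split()))
            self._buf = None


def crawl_paginated(start_url: str, fetch: Callable[[str], str],
                    max_pages: int = 100) -> List[str]:
    """Follow rel="next" links, stopping at the last page, on a loop, or at max_pages."""
    items: List[str] = []
    seen = set()
    url: Optional[str] = start_url
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        parser = PageParser()
        parser.feed(fetch(url))
        parser.close()
        items.extend(parser.items)
        # Resolve relative links such as "/list?page=2" against the current URL.
        url = urljoin(url, parser.next_href) if parser.next_href else None
    return items
```

The `seen` set matters in practice: some sites point the last page's "next" link back to the first page, and without this check the crawler would never stop.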

- Error: Incorrectly parsing HTML leading to missing or inaccurate data. Solution: Implement robust error handling and data validation mechanisms.

- Error: Website changes causing the crawler to fail. Solution: Regularly update the crawler to adapt to changes in website structure.

- Error: Rate limiting or IP blocking by the target website. Solution: Implement delays between requests and use proxies to rotate IP addresses.

- Error: Handling dynamic content loaded via JavaScript. Solution: Use browser automation tools or headless browsers to render JavaScript and extract data.
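A common way to handle rate limiting, in addition to spacing out requests, is to retry with exponential backoff and honor any wait time the server requests. The sketch below assumes a caller-supplied `fetch` that raises a hypothetical `RateLimited` exception on HTTP 429 or 503, with an optional `retry_after` taken from the `Retry-After` header. The jitter strategy ("full jitter") is one common choice, not the only one. The `sleep` parameter is there so the behavior can be tested without real waiting.

```python
import random
import time
from typing import Callable, Optional


class RateLimited(Exception):
    """Hypothetical error a fetch function raises on HTTP 429 or 503."""

    def __init__(self, retry_after: Optional[float] = None) -> None:
        super().__init__("rate limited")
        self.retry_after = retry_after   # seconds from a Retry-After header, if any


def fetch_with_backoff(fetch: Callable[[str], str], url: str,
                       max_attempts: int = 5, base_delay: float = 1.0,
                       max_delay: float = 60.0,
                       sleep: Callable[[float], None] = time.sleep) -> str:
    """Retry fetch(url) on RateLimited, waiting longer after each failure."""
    for attempt in range(max_attempts):
        try:
            return fetch(url)
        except RateLimited as exc:
            if attempt == max_attempts - 1:
                raise                      # out of attempts: let the caller decide
            if exc.retry_after is not None:
                delay = exc.retry_after    # the server said how long to wait
            else:
                # Full jitter: a random wait in [0, base_delay * 2**attempt], capped.
                delay = random.uniform(0, min(max_delay, base_delay * 2 ** attempt))
            sleep(delay)
    raise ValueError("max_attempts must be at least 1")
```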

Performance Optimization and Scalability

Optimizing list crawler performance and scalability involves efficient data handling, caching mechanisms, and robust system architecture. Efficient data handling minimizes memory usage and processing time. Caching mechanisms store previously fetched data, reducing the need to repeatedly fetch the same information. A scalable system architecture employs distributed crawling and parallel processing to handle large datasets and high request volumes. This might involve employing a message queue system to manage requests and distributing the workload across multiple machines.
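To illustrate caching combined with a queue feeding parallel workers, here is a single-process sketch. Python's `queue.Queue` stands in for the message queue, and threads stand in for separate machines; a distributed deployment would use an external message broker instead. `fetch` is caller-supplied, and `CachingFetcher` and `crawl_parallel` are hypothetical names.

```python
import queue
import threading
from typing import Callable, Dict, Iterable, Tuple


class CachingFetcher:
    """Thread-safe in-memory cache in front of a fetch function."""

    def __init__(self, fetch: Callable[[str], str]) -> None:
        self._fetch = fetch
        self._cache: Dict[str, str] = {}
        self._lock = threading.Lock()

    def __call__(self, url: str) -> str:
        with self._lock:
            if url in self._cache:
                return self._cache[url]
        # Fetch outside the lock so other workers are not blocked; two workers
        # may occasionally fetch the same URL at once, which is harmless here.
        body = self._fetch(url)
        with self._lock:
            self._cache[url] = body
        return body


def crawl_parallel(urls: Iterable[str], fetch: Callable[[str], str],
                   workers: int = 4) -> Tuple[Dict[str, str], Dict[str, Exception]]:
    """Worker threads pull URLs from a shared queue until it is empty."""
    tasks: "queue.Queue[str]" = queue.Queue()
    for url in urls:
        tasks.put(url)
    results: Dict[str, str] = {}
    failures: Dict[str, Exception] = {}
    lock = threading.Lock()

    def worker() -> None:
        while True:
            try:
                url = tasks.get_nowait()
            except queue.Empty:
                return                     # queue drained: this worker is done
            try:
                body = fetch(url)
            except Exception as exc:       # record the failure, keep working
                with lock:
                    failures[url] = exc
            else:
                with lock:
                    results[url] = body

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results, failures
```

Threads suit this workload because crawling is mostly waiting on the network. Politeness limits, such as per-site delays, still apply and would need to be shared across workers.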

The choice of database for storing extracted data is crucial, considering factors like data volume, query patterns, and performance requirements.
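For modest data volumes, an embedded database is often enough. The following sketch uses Python's built-in `sqlite3`, keyed on each item's URL, so that re-crawling the same item replaces its row instead of duplicating it. The table layout (`url`, `title`, `price`, `crawled_at`) is an assumed example schema, and SQLite is one option among many; larger volumes or heavy concurrent writes may call for a client-server database.

```python
import sqlite3
from typing import Dict, Iterable, Optional, Union

Item = Dict[str, Optional[Union[str, float]]]


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the database and the items table."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS items (
               url        TEXT PRIMARY KEY,
               title      TEXT NOT NULL,
               price      REAL,
               crawled_at TEXT DEFAULT CURRENT_TIMESTAMP
           )"""
    )
    return conn


def upsert_items(conn: sqlite3.Connection, items: Iterable[Item]) -> None:
    """Insert items; a row with the same url is replaced (and its timestamp reset)."""
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT OR REPLACE INTO items (url, title, price) VALUES (:url, :title, :price)",
            items,
        )
```

Typical queries, such as `SELECT title, price FROM items ORDER BY price`, then run directly against the stored data.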

In conclusion, list crawlers are a powerful tool for harnessing the wealth of information available on the web, provided they are used responsibly. By understanding their capabilities, limitations, and ethical implications, we can use them to extract valuable insights and support data-driven decision-making across many fields. Responsible development and deployment, within ethical guidelines and legal frameworks, is what maximizes the benefits of list crawlers while minimizing their risks.

This guide serves as a foundation for further exploration and innovation in the exciting field of web data extraction.

Quick FAQs

What are the limitations of list crawlers?

List crawlers can be limited by website design changes, anti-scraping measures, CAPTCHAs, and the speed and reliability of internet connections. Dynamically loaded content can also pose challenges.

How can I avoid legal issues when using a list crawler?

Always respect robots.txt rules, obtain explicit permission where necessary, and ensure compliance with data privacy regulations like GDPR and CCPA. Avoid overloading target websites.

What programming languages are commonly used for list crawling?

Python, with its rich libraries like Beautiful Soup and Scrapy, is a popular choice. Other languages like Java, JavaScript (Node.js), and Ruby are also used.

How do I handle pagination with a list crawler?

Techniques include identifying pagination links (e.g., “Next,” page numbers) and iteratively fetching data from subsequent pages until the end is reached.
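When a site exposes page numbers in its URLs, a simple alternative to following links is to request successive page numbers. The sketch below assumes a hypothetical URL template such as `https://example.com/list?page={page}` and a caller-supplied `fetch_items` that returns the items on one page. It stops at an empty page, or when a page repeats the previous one, because some sites serve the last page again for out-of-range numbers.

```python
from typing import Callable, List


def crawl_numbered_pages(url_template: str,
                         fetch_items: Callable[[str], List[str]],
                         first_page: int = 1,
                         max_pages: int = 500) -> List[str]:
    """Request page 1, 2, 3, ... until a page is empty or repeats the previous one."""
    collected: List[str] = []
    previous: List[str] = []
    for page in range(first_page, first_page + max_pages):
        items = fetch_items(url_template.format(page=page))
        # An empty page, or a page identical to the last one, marks the end.
        if not items or items == previous:
            break
        collected.extend(items)
        previous = items
    return collected
```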