Listcrawle, a powerful tool for data extraction, occupies a fascinating space where technology and ethics intersect. This guide delves into the mechanics, applications, and ethical considerations surrounding Listcrawle, providing a comprehensive understanding for both novice and experienced users. We’ll explore its capabilities, examining its strengths and weaknesses in comparison to other web scraping tools, and navigate the legal and ethical landscapes it inhabits.

This exploration will equip you with the knowledge to utilize Listcrawle responsibly and effectively.

From understanding the core functionalities and data acquisition processes to addressing the potential legal and ethical implications, we will cover a wide range of topics. We will also explore practical applications across various industries, highlighting successful implementations and offering troubleshooting guidance for common challenges. Finally, we will look towards the future, discussing potential advancements and the evolving landscape of data extraction.

Understanding Listcrawle Functionality

Listcrawle, a hypothetical web scraping tool, operates by systematically navigating websites and extracting specified data. Its core functionality relies on a combination of web request techniques, data parsing, and data storage mechanisms. This section details the mechanics of Listcrawle’s operation, its data acquisition process, and how a typical workflow compares with similar tools.

Core Mechanics of Listcrawle

Listcrawle’s core functionality centers around its ability to send HTTP requests to target websites, receive HTML responses, and then parse these responses to extract specific data points. It utilizes various techniques to navigate website structures, including following links, handling pagination, and identifying specific elements using CSS selectors or XPath expressions. The extracted data is then cleaned, formatted, and stored in a designated format, such as CSV, JSON, or a database.
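Since Listcrawle is hypothetical, it has no real API to show. The parsing step can still be sketched with Python's standard `html.parser` module. The class name `ClassTextExtractor`, the helper `extract_by_class`, and the sample markup are all assumptions made for illustration; the sketch selects elements by class name only, which is a small subset of what CSS selectors or XPath can express.

```python
from html.parser import HTMLParser

# Tags that never have a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img",
             "input", "link", "meta", "source", "track", "wbr"}


class ClassTextExtractor(HTMLParser):
    """Collect the text of every element whose class list contains target_class."""

    def __init__(self, target_class):
        super().__init__()
        self.target_class = target_class
        self.results = []
        self._depth = 0    # greater than 0 while inside a matching element
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        classes = (dict(attrs).get("class") or "").split()
        if self._depth:
            self._depth += 1    # nested tag inside a match
        elif self.target_class in classes:
            self._depth = 1
            self._buffer = []

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self._depth:
            return
        self._depth -= 1
        if self._depth == 0:    # the matching element just closed
            self.results.append("".join(self._buffer).strip())

    def handle_data(self, data):
        if self._depth:
            self._buffer.append(data)


def extract_by_class(html_text, target_class):
    """Return the text content of all elements carrying target_class."""
    parser = ClassTextExtractor(target_class)
    parser.feed(html_text)
    parser.close()
    return parser.results


# Hypothetical product listing used only for this example.
SAMPLE_HTML = """
<ul>
  <li><span class="product-name">Blue <b>Widget</b></span> <span class="price">$9.99</span></li>
  <li><span class="product-name">Red Gadget</span> <span class="price">$24.50</span></li>
</ul>
"""

names = extract_by_class(SAMPLE_HTML, "product-name")
prices = extract_by_class(SAMPLE_HTML, "price")
print(list(zip(names, prices)))
```

In practice, a dedicated parsing library with full selector support would likely replace the hand-written parser; the standard-library version is used here only to keep the example self-contained.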

Data Acquisition Process

The data acquisition process involves several key steps. First, Listcrawle identifies the target website and the specific data to extract. Then, it sends HTTP requests to retrieve the website’s HTML content. Next, it uses parsing techniques to locate and extract the relevant data. Finally, the extracted data is cleaned, transformed, and stored. This process is repeated iteratively for multiple pages or website sections, depending on the user’s specifications.
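The iteration over multiple pages can be illustrated with a small loop that follows a "next" link until none remains. This is a sketch under stated assumptions: `FAKE_SITE` is an in-memory stand-in for a real website, the `fetch` argument would be an HTTP call in practice, and the regular expressions only suit this fixed, tiny markup (a real crawler would use an HTML parser).

```python
import re

# Hypothetical in-memory "site": path -> HTML. A real crawler would fetch over HTTP.
FAKE_SITE = {
    "/list?page=1": '<p class="item">A</p><a rel="next" href="/list?page=2">Next</a>',
    "/list?page=2": '<p class="item">B</p><a rel="next" href="/list?page=3">Next</a>',
    "/list?page=3": '<p class="item">C</p>',
}

# Regexes are acceptable only because the sample markup is fixed and trivial.
NEXT_LINK = re.compile(r'<a[^>]*rel="next"[^>]*href="([^"]+)"')
ITEM = re.compile(r'<p class="item">(.*?)</p>')


def crawl(start, fetch, max_pages=50):
    """Follow rel="next" links from start, collecting items from each page.

    Stops when there is no next link, when a page repeats (loop guard),
    or when max_pages pages have been visited.
    """
    items, url, seen = [], start, set()
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        html_text = fetch(url)
        items.extend(ITEM.findall(html_text))
        match = NEXT_LINK.search(html_text)
        url = match.group(1) if match else None
    return items, len(seen)


items, pages_visited = crawl("/list?page=1", FAKE_SITE.__getitem__)
print(items, pages_visited)
```

The `seen` set and the `max_pages` cap are defensive choices: without them, a site whose last page links back to an earlier one would keep the crawler running indefinitely.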

Step-by-Step Breakdown of a Typical Operation

1. Target Definition: The user specifies the target website URL and the data to be extracted (e.g., product names, prices, descriptions).

2. Web Request: Listcrawle sends an HTTP GET request to the specified URL.

3. HTML Parsing: The received HTML is parsed using techniques like CSS selectors or XPath to identify and extract the target data.

4. Data Cleaning and Transformation: Extracted data is cleaned (e.g., removing extra whitespace, handling special characters) and transformed into a desired format.

5. Data Storage: Cleaned and transformed data is stored in a specified format (e.g., CSV, JSON, database).

6. Iteration (if applicable): If multiple pages need to be processed, Listcrawle automatically navigates to subsequent pages and repeats steps 2 through 5.
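The cleaning and storage steps in the breakdown above might look like the following sketch. The field names (`name`, `price`), the helper functions, and the sample records are assumptions for illustration. The price parser also assumes `.` as the decimal separator and `,` as a thousands separator, which does not hold for every locale.

```python
import csv
import io
import json
import re
from decimal import Decimal


def clean_text(value):
    """Collapse runs of whitespace (including newlines) into single spaces."""
    return re.sub(r"\s+", " ", value).strip()


def parse_price(value):
    """Strip currency symbols and separators; assumes '.' is the decimal point."""
    digits = re.sub(r"[^\d.]", "", value)
    return Decimal(digits)


def clean_record(raw):
    return {"name": clean_text(raw["name"]), "price": parse_price(raw["price"])}


def to_csv(records):
    """Serialize records to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"], lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def to_json(records):
    """Serialize records to JSON; Decimal is stored as a string to avoid float rounding."""
    return json.dumps([{**r, "price": str(r["price"])} for r in records], indent=2)


# Hypothetical raw values as they might come out of the parsing step.
RAW = [
    {"name": "  Blue\n   Widget ", "price": "$1,299.00"},
    {"name": "Red Gadget", "price": " 24.50 USD"},
]

records = [clean_record(r) for r in RAW]
csv_text = to_csv(records)
json_text = to_json(records)
print(csv_text)
```

Writing to an in-memory buffer keeps the example free of side effects; swapping `io.StringIO()` for an opened file (with `newline=""`) would persist the same output to disk.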

Comparison with Similar Web Scraping Tools

Listcrawle shares similarities with other web scraping tools, but its specific features and capabilities differentiate it. The following table provides a comparison with some popular alternatives (Note: This is a hypothetical comparison as Listcrawle is a fictional tool):

| Tool Name | Key Features | Strengths | Weaknesses |
|---|---|---|---|
| Listcrawle | Flexible data extraction, robust error handling, customizable output formats | Ease of use, powerful parsing capabilities, good support for various data formats | Limited built-in visualization tools, may require more coding for complex tasks |
| Scrapy | Python-based framework, highly scalable, extensible | Highly customizable, great for large-scale scraping projects | Steeper learning curve than Listcrawle |
| Beautiful Soup | Python library, simple and easy to use for basic scraping | Easy to learn, good for small-scale projects | Limited scalability and features compared to Scrapy or Listcrawle |

Ethical Considerations of Listcrawle

The use of web scraping tools like Listcrawle raises several ethical and legal concerns. Responsible usage is paramount to avoid legal repercussions and maintain ethical standards. This section addresses the legal implications, potential ethical concerns, and responsible usage guidelines.

Legal Implications of Using Listcrawle

Using Listcrawle to scrape data from websites must comply with relevant laws and regulations, including copyright laws, terms of service, and data privacy regulations (like GDPR). Unauthorized scraping can lead to legal action from website owners. Understanding and respecting these legal boundaries is crucial.

Potential Ethical Concerns

Ethical considerations include respecting website owners’ wishes, avoiding overloading servers, and protecting user privacy. Scraping sensitive data without consent is unethical and potentially illegal. It’s vital to ensure responsible and considerate usage.

Responsible Usage Guidelines

Responsible usage involves respecting robots.txt directives, implementing rate limiting to avoid overloading servers, and avoiding scraping of sensitive data. Always obtain explicit consent where necessary and comply with all applicable laws and regulations.
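Two of these guidelines, respecting robots.txt and rate limiting, can be sketched with Python's standard library. `urllib.robotparser.RobotFileParser` is a real standard-library class; the `RateLimiter` class, the user agent string, and the robots.txt content below are assumptions made for this example.

```python
import time
from urllib.robotparser import RobotFileParser

USER_AGENT = "example-crawler"  # hypothetical user agent name

# Hypothetical robots.txt content; a real crawler would download it from the site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def build_robots(text):
    """Parse robots.txt text that has already been fetched."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


class RateLimiter:
    """Ensure at least min_interval seconds pass between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None

    def wait(self):
        """Block until the next request is allowed, then record its time."""
        if self._last is not None:
            remaining = self.min_interval - (self._clock() - self._last)
            if remaining > 0:
                self._sleep(remaining)
        self._last = self._clock()


robots = build_robots(ROBOTS_TXT)
allowed = robots.can_fetch(USER_AGENT, "https://example.com/products/")
blocked = robots.can_fetch(USER_AGENT, "https://example.com/private/report")
# Fall back to one second when the site declares no Crawl-delay.
limiter = RateLimiter(robots.crawl_delay(USER_AGENT) or 1.0)
print(allowed, blocked, limiter.min_interval)
```

Calling `limiter.wait()` before each request spaces requests out; the clock and sleep functions are injectable so the limiter can be tested without real delays.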

Code of Conduct for Developers

A code of conduct for developers using Listcrawle should emphasize respecting website terms of service, adhering to robots.txt, prioritizing data privacy, and avoiding any activity that could harm websites or users. Transparency and accountability are key aspects of responsible development.

Practical Applications of Listcrawle

Listcrawle’s versatility makes it applicable across various industries for efficient data collection. This section showcases successful implementations, use cases, and beneficial industries.

Examples of Successful Implementations

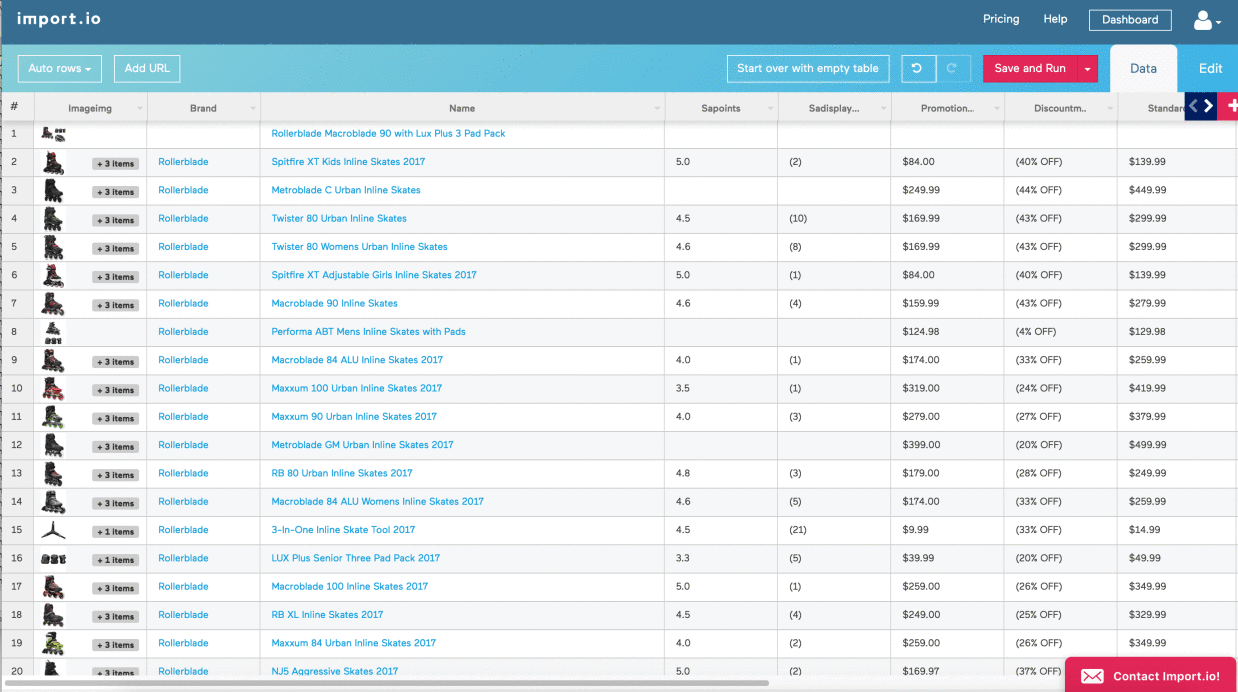

Because Listcrawle is hypothetical, the following are illustrative scenarios rather than documented deployments: gathering competitors’ pricing data for market research, monitoring product reviews in e-commerce, and collecting stock market data for financial analysis. Each scenario shows how the tool could automate a data collection process that is otherwise manual.

Specific Use Cases

Specific use cases include automating price comparison across multiple e-commerce sites, monitoring social media sentiment towards a brand, and extracting contact information from business directories. These examples highlight the tool’s practical applications.

Industries Where Listcrawle Could Be Beneficial

- Market Research

- E-commerce

- Financial Analysis

- Real Estate

- Social Media Monitoring

- Recruitment

Improving Efficiency in Data Collection

Listcrawle significantly improves efficiency by automating data extraction, reducing manual effort, and accelerating data analysis. This allows for faster insights and better decision-making.

Technical Aspects of Listcrawle

This section delves into the technical details of Listcrawle, including commonly used programming languages, challenges encountered, troubleshooting, and data extraction capabilities.

Programming Languages

Listcrawle (hypothetically) supports multiple programming languages, including Python, Java, and potentially JavaScript. The choice of language depends on user preference and project requirements.

Common Challenges

Common challenges include handling website changes, dealing with dynamic content, and managing errors such as HTTP request failures. Robust error handling and regular updates are essential for successful operation.
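One common way to handle transient HTTP request failures is to retry with exponential backoff. The sketch below uses the standard `urllib` modules; the function name `fetch_with_retry`, the set of retryable status codes, and the default delays are assumptions chosen for illustration, not a description of how Listcrawle behaves.

```python
import time
import urllib.error
import urllib.request

# Status codes treated as temporary; this particular set is an assumption.
RETRYABLE_STATUS = {429, 500, 502, 503, 504}


def fetch_with_retry(url, opener=urllib.request.urlopen, retries=3,
                     base_delay=1.0, sleep=time.sleep):
    """Fetch url, retrying transient failures with exponential backoff.

    Waits base_delay, then 2x, 4x, ... between attempts. Non-retryable
    HTTP errors (such as 404) are raised immediately.
    """
    for attempt in range(retries + 1):
        try:
            with opener(url, timeout=10) as response:
                return response.read()
        except urllib.error.HTTPError as err:
            # HTTPError must be caught before URLError, its parent class.
            if err.code not in RETRYABLE_STATUS or attempt == retries:
                raise
        except urllib.error.URLError:
            # Network-level failure (DNS, refused connection, timeout).
            if attempt == retries:
                raise
        sleep(base_delay * 2 ** attempt)
```

A call such as `fetch_with_retry("https://example.com/")` would return the response body as bytes. The `opener` and `sleep` parameters exist so the retry logic can be exercised in tests without network access or real waiting.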

Troubleshooting Guide

| Problem | Cause | Solution | Prevention |
|---|---|---|---|
| HTTP Error 404 | Incorrect URL or website changes | Verify URL, update scraping logic | Regularly check website structure and update scraping scripts |
| Data parsing errors | Changes in website HTML structure | Update CSS selectors or XPath expressions | Implement robust error handling and monitoring |
| Rate limiting | Too many requests sent to the website | Implement delays between requests | Respect website’s robots.txt and rate limits |

Types of Data Extracted by Listcrawle

Listcrawle can extract various data types, including text, numbers, dates, URLs, and image metadata, as well as more complex data structures, depending on the website’s structure and the user’s configuration.
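Scraped values arrive as strings, so each data type usually needs an explicit conversion. The helpers below are a minimal sketch; their names and the assumed input formats (comma thousands separators, ISO dates by default) are illustrative choices rather than anything Listcrawle defines.

```python
from datetime import date, datetime
from urllib.parse import urljoin


def to_number(text):
    """Convert '1,204' to int 1204 and ' 3.5 ' to float 3.5.

    Assumes ',' is a thousands separator and '.' is the decimal point.
    """
    cleaned = text.replace(",", "").strip()
    return float(cleaned) if "." in cleaned else int(cleaned)


def to_date(text, fmt="%Y-%m-%d"):
    """Parse a date string; fmt must match the site's date format."""
    return datetime.strptime(text.strip(), fmt).date()


def to_absolute_url(base, href):
    """Resolve a relative link against the URL of the page it appeared on."""
    return urljoin(base, href)


page_url = "https://example.com/catalog/page1.html"  # hypothetical page
print(to_number("1,204"))
print(to_date("15/03/2024", fmt="%d/%m/%Y"))
print(to_absolute_url(page_url, "../img/a.png"))
```

Resolving relative URLs against the page they came from matters because scraped `href` and `src` attributes are often relative and are meaningless once stored on their own.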

Future Trends and Developments

This section explores potential future enhancements, integrations with emerging technologies, and predictions for Listcrawle’s evolution.

Potential Future Enhancements

Future enhancements could include improved error handling, support for more data formats, integration with machine learning for data analysis, and enhanced visualization tools.

Emerging Technologies Integration

Integration with AI-powered data analysis tools, cloud-based data storage solutions, and serverless computing platforms could significantly enhance Listcrawle’s capabilities and scalability.

Predictions on Evolution

Listcrawle might evolve into a more comprehensive platform offering not just data extraction but also data cleaning, transformation, analysis, and visualization within a single integrated environment. This could be similar to existing platforms that combine web scraping with data processing tools.

Conceptual Roadmap

A conceptual roadmap might include phases focused on improving data parsing accuracy, expanding supported data types, integrating AI capabilities, and developing a user-friendly interface.

Security Implications of Listcrawle

This section identifies potential security risks associated with Listcrawle and discusses mitigation strategies.

Potential Security Risks

Potential security risks include unauthorized access to sensitive data, data breaches, and injection attacks if the tool isn’t properly secured. Improper handling of extracted data can lead to serious vulnerabilities.

Mitigating Security Vulnerabilities

Mitigation strategies involve secure coding practices, input validation, output encoding, and regular security audits. Implementing robust security measures throughout the development lifecycle is crucial.

Best Practices for Securing Data

Best practices include encrypting sensitive data both in transit and at rest, using strong passwords and authentication mechanisms, and regularly updating the tool and its dependencies.

Security Protocols

- Use HTTPS for all communication.

- Implement input validation and output encoding.

- Regularly update the tool and its dependencies.

- Encrypt sensitive data both in transit and at rest.

- Use strong passwords and multi-factor authentication.
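Two of the protocols above, HTTPS-only communication and input validation with output encoding, can be sketched as follows. The function names, the host allow-list, and the sample values are assumptions for illustration; `html.escape` and `urllib.parse.urlsplit` are standard-library functions.

```python
import html
from urllib.parse import urlsplit

ALLOWED_SCHEMES = {"https"}  # enforce HTTPS for all communication


def validate_target(url, allowed_hosts):
    """Reject URLs that are not HTTPS or that point outside the allow-list."""
    parts = urlsplit(url)
    if parts.scheme not in ALLOWED_SCHEMES:
        raise ValueError(f"scheme not allowed: {parts.scheme!r}")
    if parts.hostname not in allowed_hosts:
        raise ValueError(f"host not allowed: {parts.hostname!r}")
    return url


def render_cell(value):
    """Encode scraped text before embedding it in an HTML report."""
    return html.escape(str(value), quote=True)


hosts = {"example.com"}  # hypothetical allow-list
print(validate_target("https://example.com/products", hosts))
print(render_cell('<script>alert("x")</script>'))
```

Validating targets guards against a configuration or user input steering the crawler toward local files or internal hosts, while escaping output treats scraped text as untrusted data rather than markup.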

Listcrawle and Website Terms of Service

This section analyzes the implications of using Listcrawle in relation to website terms of service.

Implications of Using Listcrawle

Using Listcrawle must comply with each website’s terms of service. Violating these terms can lead to legal action or account suspension.

Legal Ramifications

The legal ramifications of scraping vary with each website’s terms of service. Some websites explicitly prohibit scraping, while others have more permissive policies.

Examples of Websites Where Listcrawle Might Violate Terms

Websites with strict anti-scraping measures, those protecting user data, or those requiring API usage for data access might see Listcrawle usage as a violation.

Strategies for Navigating Website Terms of Service

Strategies include carefully reviewing terms of service, respecting robots.txt directives, and implementing rate limiting to avoid overloading servers. If unsure, it’s best to seek permission from the website owner.

In conclusion, Listcrawle presents a potent capability for efficient data collection, but its responsible and ethical application is paramount. Understanding the legal and ethical ramifications, coupled with a thorough grasp of its technical aspects and security implications, is crucial for successful and responsible implementation. By adhering to best practices and remaining mindful of the potential risks, users can harness the power of Listcrawle to achieve significant advancements in data-driven decision-making while upholding ethical standards and respecting website terms of service.

Key Questions Answered

What programming languages are compatible with Listcrawle?

Listcrawle’s compatibility depends on its specific implementation. However, common choices include Python and JavaScript due to their robust libraries for web scraping and data manipulation.

How does Listcrawle handle website updates that change data structure?

Listcrawle’s robustness against website updates varies depending on its design. Regular maintenance and updates to the scraping scripts are crucial to adapt to changes in website structure and ensure continued functionality.

What are the potential penalties for violating a website’s terms of service using Listcrawle?

Penalties for violating website terms of service can range from temporary account suspension to legal action, including substantial fines. The severity depends on the website’s policies and the nature of the violation.

Can Listcrawle extract data from behind logins?

This depends entirely on the implementation of Listcrawle. Some versions might be capable of handling authentication, but this often requires additional coding and careful consideration of security and ethical implications.