A listcrawler is an automated program that collects list-style data from websites, and the term usually comes up alongside web scraping and data extraction. This guide covers how listcrawlers work, where they are used, and the ethical and legal questions they raise. It also walks through the technical side of building one, the methods site owners use to detect and block them, and examples that illustrate their impact.

Understanding listcrawlers is useful for developers and website owners alike.

Along the way, we compare listcrawlers with other data extraction techniques and include practical examples and code snippets. The aim is to offer a resource for anyone interested in learning about or using listcrawlers responsibly.

Listcrawlers: A Comprehensive Overview

Listcrawlers are automated programs designed to extract specific data from websites, typically from lists or other structured data. Unlike general-purpose web scrapers, which often process entire web pages, listcrawlers are narrowly targeted and collect specific fields such as email addresses, phone numbers, or product details.

Definition and Functionality of Listcrawlers

A listcrawler’s core functionality centers on identifying and extracting data from lists presented on websites. This involves navigating website structure, identifying list elements (e.g., using HTML tags like <ul>, <ol>, or tables), and extracting the desired data points from within those elements. The process typically involves sending HTTP requests to the target website, parsing the HTML response, and extracting the relevant information.
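
The parsing step described above can be sketched with Python's standard library alone. This is a minimal illustration, not a production parser: the names `ListItemParser`, `extract_list_items`, and `SAMPLE_HTML` are made up for this example, the HTML is an inline string rather than a fetched page, and nested lists are simply folded into their parent item.

```python
from html.parser import HTMLParser


class ListItemParser(HTMLParser):
    """Collects the text content of every top-level <li> element."""

    def __init__(self):
        super().__init__()
        self.items = []     # finished list-item strings
        self._depth = 0     # greater than 0 while inside an <li>
        self._buffer = []   # text fragments of the item being read

    def handle_starttag(self, tag, attrs):
        if tag == "li":
            self._depth += 1

    def handle_endtag(self, tag):
        if tag == "li" and self._depth > 0:
            self._depth -= 1
            if self._depth == 0:
                # Collapse runs of whitespace left over from the markup.
                text = " ".join("".join(self._buffer).split())
                if text:
                    self.items.append(text)
                self._buffer = []

    def handle_data(self, data):
        if self._depth > 0:
            self._buffer.append(data)


def extract_list_items(html):
    """Return the text of each list item found in the given HTML string."""
    parser = ListItemParser()
    parser.feed(html)
    parser.close()
    return parser.items


# Hypothetical sample markup standing in for an HTTP response body.
SAMPLE_HTML = """
<ul>
  <li>Widget A</li>
  <li><b>Widget</b> B</li>
</ul>
"""

print(extract_list_items(SAMPLE_HTML))
```

In practice a library such as Beautiful Soup would usually replace the hand-written parser; the standard-library version is used here only to keep the example dependency-free.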

Types of Listcrawlers and Their Applications

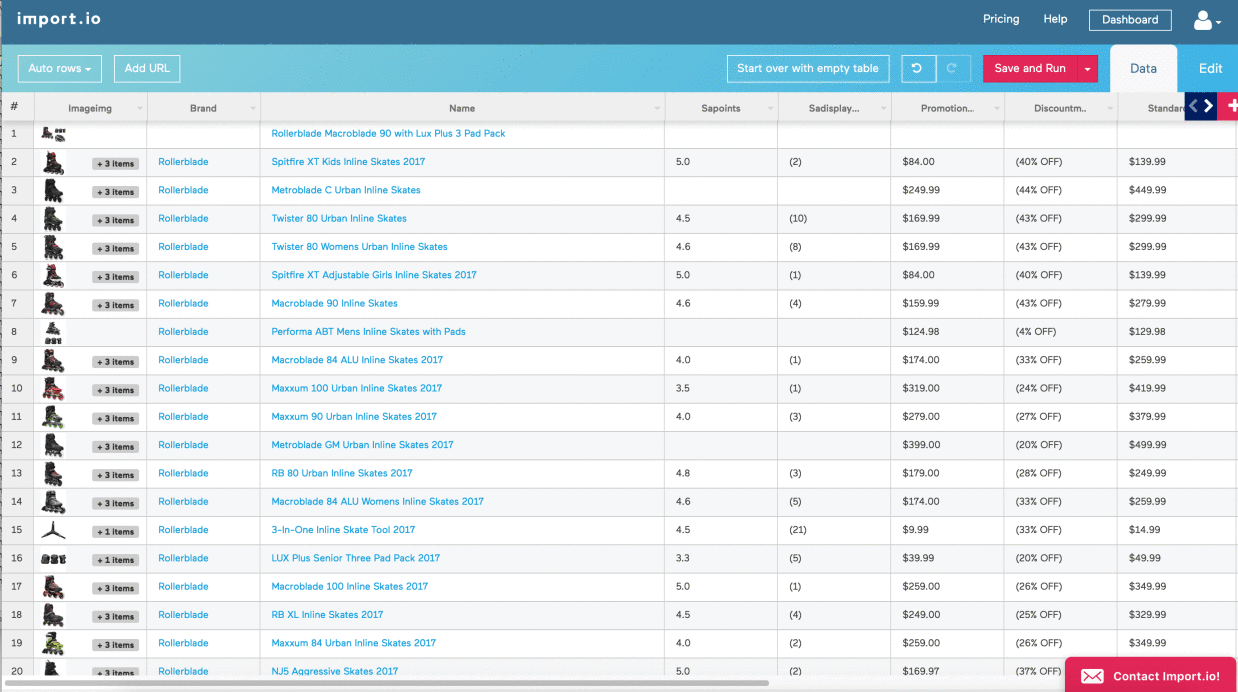

Listcrawlers can be categorized based on their target data and extraction methods. Some common types include those targeting email addresses (email harvesters), phone numbers, product details from e-commerce sites, and social media profiles. Applications range from market research and lead generation to price comparison and competitive analysis. For instance, a real estate company might use a listcrawler to gather contact information from property listing websites.

Examples of Targeted Websites and Data Sources

Common targets for listcrawlers include online directories, e-commerce platforms, job boards, and social media websites. Data commonly extracted includes contact information (names, emails, phone numbers), product details (prices, descriptions, reviews), and user profiles (biographical information, connections).

Listcrawlers vs. Web Scrapers

While both listcrawlers and web scrapers extract data from websites, they differ in their scope and approach. Web scrapers typically extract broader information from entire web pages, while listcrawlers focus specifically on structured lists. Listcrawlers are more efficient for targeted data extraction, while web scrapers provide a broader view of website content. However, both can be subject to similar ethical and legal considerations.

| Technique | Target | Method | Limitations |
|---|---|---|---|
| XPath | XML and HTML elements | Navigates the DOM using XPath expressions | Can be brittle; requires understanding of website structure |
| CSS Selectors | HTML elements | Selects elements based on CSS selectors | Similar to XPath, can be affected by website changes |
| Regular Expressions | Text patterns | Matches specific patterns within text data | Can be complex for intricate patterns; may produce false positives |
| API Access (if available) | Structured data | Utilizes the website’s API for data retrieval | Relies on API availability and rate limits |
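
To make the trade-offs in the table more concrete, the sketch below pulls the same prices out of a small snippet twice: once structurally, using the limited XPath subset in Python's `xml.etree.ElementTree`, and once with a regular expression. The markup in `SNIPPET` and both function names are invented for illustration, and the XPath version assumes well-formed markup, which real HTML often is not.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical, well-formed markup standing in for part of a product page.
SNIPPET = """
<ul id="products">
  <li class="item"><span class="name">Kettle</span> <span class="price">24.99</span></li>
  <li class="item"><span class="name">Toaster</span> <span class="price">31.50</span></li>
</ul>
"""


def prices_with_xpath(markup):
    """Structural approach: walk the element tree with an XPath expression."""
    root = ET.fromstring(markup.strip())
    path = ".//li[@class='item']/span[@class='price']"
    return [span.text for span in root.findall(path)]


def prices_with_regex(markup):
    """Pattern approach: match the literal text surrounding each price."""
    return re.findall(r'<span class="price">([\d.]+)</span>', markup)


print(prices_with_xpath(SNIPPET))
print(prices_with_regex(SNIPPET))
```

Both calls return the same values here, but they fail differently: the XPath version breaks if the nesting changes, while the regular expression breaks if attribute order or quoting changes, and it can match lookalike text outside the list.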

Ethical and Legal Implications of Listcrawling

Ethical listcrawling means honoring a website's terms of service and robots.txt directives, and not collecting personally identifiable information without consent. Legal risks include copyright infringement, breach of terms of service, and violations of privacy laws. Responsible listcrawling emphasizes data privacy, informed consent, and compliance with relevant regulations.

A Hypothetical Legal Dispute Involving a Listcrawler

Imagine a company using a listcrawler to harvest email addresses from a competitor’s website, violating their terms of service and potentially privacy laws. The competitor could sue for damages, claiming loss of business due to unauthorized data collection and potential misuse of customer information. This highlights the importance of adhering to legal and ethical guidelines.

Best Practices for Ethical Listcrawling

- Respect robots.txt directives

- Obtain explicit consent for data collection

- Avoid overloading target websites

- Comply with all applicable laws and regulations

- Use data responsibly and ethically
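
The first practice in the list, respecting robots.txt, can be checked programmatically with the standard library's `urllib.robotparser`. The sketch below parses an inline robots.txt so that it runs offline; the rules in `ROBOTS_TXT`, the `USER_AGENT` string, and the example.com URLs are all assumptions made for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real crawler would download it from the site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

USER_AGENT = "example-listcrawler"  # illustrative name, not a real crawler


def build_policy(robots_text):
    """Parse robots.txt text into a reusable policy object."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


policy = build_policy(ROBOTS_TXT)

# Ask before each request whether the path may be fetched.
print(policy.can_fetch(USER_AGENT, "https://example.com/listings/"))
print(policy.can_fetch(USER_AGENT, "https://example.com/private/members"))

# Honor the requested pause between requests, if the site declares one.
print(policy.crawl_delay(USER_AGENT))
```

Sleeping for the declared crawl delay between requests also addresses the third practice, avoiding overload of the target website.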

Technical Aspects of Listcrawler Development

Listcrawler development often involves programming languages like Python (with libraries such as Beautiful Soup and Scrapy) and JavaScript (with Node.js and Puppeteer). Building a basic listcrawler involves defining the target website, identifying the data to extract, crafting appropriate selectors (XPath or CSS), sending HTTP requests, parsing the HTML response, and storing the extracted data. Challenges include handling dynamic content, website updates, and anti-scraping measures.

Handling HTTP Response Codes

Listcrawlers must handle various HTTP response codes effectively. A successful request (200 OK) allows for data extraction. Error codes (e.g., 404 Not Found, 500 Internal Server Error) require appropriate error handling and potentially retries. Rate limiting (429 Too Many Requests) necessitates implementing delays or other strategies to avoid being blocked.
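
One way to organize this handling is a small retry loop. In the sketch below, `fetch` is a hypothetical callable that returns a `(status, headers, body)` tuple, which keeps the logic independent of any particular HTTP library. The set of retryable codes and the backoff schedule are assumptions rather than fixed rules.

```python
import time

# Status codes treated as temporary; this choice is an assumption of the sketch.
RETRYABLE = {429, 500, 502, 503, 504}


def fetch_with_retries(fetch, url, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url) until it returns 200 or the attempts are exhausted.

    fetch must return a (status_code, headers_dict, body) tuple.
    """
    for attempt in range(1, max_attempts + 1):
        status, headers, body = fetch(url)
        if status == 200:
            return body
        # Permanent errors such as 404 are not retried.
        if status not in RETRYABLE or attempt == max_attempts:
            raise RuntimeError(f"giving up on {url}: HTTP {status}")
        # Prefer the server's Retry-After hint when it is given in seconds;
        # otherwise back off exponentially: 1s, 2s, 4s, ...
        retry_after = headers.get("Retry-After", "")
        if retry_after.isdigit():
            delay = float(retry_after)
        else:
            delay = base_delay * 2 ** (attempt - 1)
        sleep(delay)
```

The `sleep` parameter exists so the delays can be replaced in tests. Note that `Retry-After` may also carry an HTTP date, which this sketch does not parse and instead treats as missing.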

Code Snippet Example (Python – Pseudocode)

This simplified example demonstrates the core logic:

    # Import necessary libraries
    # Define target URL
    # Send HTTP request
    # Parse HTML response
    # Use CSS selectors or XPath to find list elements
    # Extract data from list elements
    # Store data (e.g., in a CSV file)
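
A runnable version of those steps might look like the following sketch, which uses only the standard library. `TARGET_URL`, `USER_AGENT`, and every function and class name are hypothetical, and the parser assumes the data of interest is a set of links inside `<li>` elements; a real site would need its own selectors.

```python
import csv
from html.parser import HTMLParser
from urllib.request import Request, urlopen

TARGET_URL = "https://example.com/directory"  # hypothetical target
USER_AGENT = "example-listcrawler/0.1"         # hypothetical identifier


class LinkListParser(HTMLParser):
    """Collects (text, href) pairs for links that appear inside <li> elements."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._li_depth = 0
        self._href = None   # href of the link currently being read, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "li":
            self._li_depth += 1
        elif tag == "a" and self._li_depth > 0:
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_endtag(self, tag):
        if tag == "li" and self._li_depth > 0:
            self._li_depth -= 1
        elif tag == "a" and self._href is not None:
            name = " ".join("".join(self._text).split())
            self.rows.append((name, self._href))
            self._href = None

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)


def fetch_html(url):
    """Send the HTTP request and decode the response body."""
    request = Request(url, headers={"User-Agent": USER_AGENT})
    with urlopen(request, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def parse_rows(html):
    """Parse the HTML and extract one row per list link."""
    parser = LinkListParser()
    parser.feed(html)
    parser.close()
    return parser.rows


def write_csv(rows, out_file):
    """Store the extracted rows in CSV form on an open text file."""
    writer = csv.writer(out_file)
    writer.writerow(["name", "url"])
    writer.writerows(rows)


def crawl(url, out_path):
    """Run the whole pipeline: fetch, parse, store."""
    rows = parse_rows(fetch_html(url))
    with open(out_path, "w", newline="", encoding="utf-8") as out_file:
        write_csv(rows, out_file)
    return len(rows)
```

Calling `crawl(TARGET_URL, "listings.csv")` would run the full pipeline against the placeholder URL. Keeping fetching, parsing, and storage in separate functions makes it possible to test the parser on saved HTML without touching the network.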

Detecting and Preventing Listcrawling

Website owners detect listcrawling by monitoring HTTP request rates, analyzing user-agent strings, and identifying unusual access patterns. Mitigation options include rate limiting, CAPTCHAs, robots.txt rules, and IP blocking. Because robots.txt is advisory and only well-behaved crawlers honor it, a robust system combines several techniques to create layers of defense.
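
Rate limiting, the first mitigation mentioned above, is often implemented as a sliding window per client. The class below is a minimal in-memory sketch under that assumption; `SlidingWindowLimiter` and its parameters are illustrative names, and a production deployment would more likely rely on a reverse proxy or a shared store than on a Python dictionary.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allows at most max_requests per client within the last window_seconds."""

    def __init__(self, max_requests, window_seconds, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._clock = clock                 # injectable so tests can control time
        self._hits = defaultdict(deque)     # client id -> timestamps of recent requests

    def allow(self, client_id):
        """Return True if the request may proceed, False if it should be rejected."""
        now = self._clock()
        hits = self._hits[client_id]
        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True
```

A request handler would call `allow()` with the client's IP address or API key and answer with 429 Too Many Requests whenever it returns `False`.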

Anti-Listcrawling Security Best Practices

- Implement rate limiting

- Use CAPTCHAs or other authentication mechanisms

- Regularly update robots.txt

- Monitor website logs for suspicious activity

- Employ IP blocking and other security measures
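
Log monitoring from the list above can start as a simple offline pass over access logs. The sketch below assumes logs in the common "combined" format; the threshold, the `SUSPICIOUS_AGENT_HINTS` tuple, and the function name `flag_clients` are assumptions chosen for illustration, not recommended values.

```python
import re
from collections import Counter

# Matches the combined log format: ip, identity, user, [time], "request", status, size, "referer", "agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

# Illustrative substrings that often appear in default automation user agents.
SUSPICIOUS_AGENT_HINTS = ("python-requests", "scrapy", "curl")


def flag_clients(log_lines, max_requests=100):
    """Return {ip: [reasons]} for clients that look automated."""
    counts = Counter()
    agents = {}
    for line in log_lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines that are not in the expected format
        ip = match["ip"]
        counts[ip] += 1
        agents.setdefault(ip, set()).add(match["agent"].lower())

    flagged = {}
    for ip, count in counts.items():
        reasons = []
        if count > max_requests:
            reasons.append(f"{count} requests")
        if any(hint in agent for agent in agents[ip] for hint in SUSPICIOUS_AGENT_HINTS):
            reasons.append("automation user-agent")
        if reasons:
            flagged[ip] = reasons
    return flagged
```

User-agent strings are trivial to spoof, so a match is only a weak signal. Request volume and access patterns tend to be more reliable, which is one reason to combine several checks.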

Case Studies and Real-World Examples

One example involves a large e-commerce website that experienced a significant listcrawling incident in which thousands of customer email addresses were collected without authorization, resulting in a data breach, reputational damage, and legal action. Another case study might focus on a news website that implemented advanced anti-scraping measures, reducing the impact of listcrawlers while preserving access for legitimate users.

Lessons Learned from Listcrawling Incidents

- Proactive security measures are crucial

- Regular monitoring and logging are essential

- Robust anti-scraping techniques are needed

- Legal and ethical considerations must be addressed

- User privacy should be prioritized

In conclusion, listcrawlers sit where technical capability meets ethical and legal constraints. They are powerful tools for data acquisition, but responsible development and deployment are paramount. Understanding the technical aspects, the ethical implications, and the potential legal ramifications makes it possible to use listcrawlers for beneficial purposes while mitigating the risks. This guide is a starting point for further investigation and responsible practice.

FAQ

What is the difference between a listcrawler and a web scraper?

While both extract data, listcrawlers specifically target lists or structured data within websites, whereas web scrapers can handle more unstructured data and broader website navigation.

Are all listcrawlers illegal?

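No. Whether a listcrawler is lawful depends on what it collects, how the data is used, and the jurisdiction. Legal problems typically arise when crawling violates terms of service, copyright law, or privacy regulations. Responsible use, including respecting robots.txt and obtaining consent where necessary, is crucial.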

What are some common challenges in listcrawler development?

Common challenges include handling dynamic content, coping with anti-scraping measures, managing rate limits, and ensuring data accuracy and consistency.

How can I protect my website from listcrawlers?

Implement robust security measures such as rate limiting, CAPTCHAs, robots.txt, and IP blocking. Regularly monitor website traffic for suspicious activity.